GitHub Copilot, Windsurf, and Cursor: growing evolution of AI systems

This digest was prepared by:

- Alex Zalesov, Systems Architect

- Aliaksandr Paklonski, Director of Technology Solutions

- Tatsiana Hmyrak, Director of Technology Solutions

For a recap of previous updates on these tools, read AI SDLC Digest #1.


This issue of Applied AI Digest highlights the rapid evolution of AI-powered development tools, with a focus on metrics, workflow automation, and deeper integration across the software delivery lifecycle.

- Windsurf introduces the Percentage of code written metric, providing enterprises with a concrete way to measure the impact of AI assistance on code output and productivity.

- GitHub Copilot enforces new premium model quotas, expands agentic capabilities in Visual Studio, and begins deprecating legacy models, signaling a shift toward more advanced, context-aware AI agents.

- Also, Windsurf continues to push agentic workflows forward with Planning Mode for long-running tasks, a new browser for end-to-end workflow awareness, and a dedicated EU production cluster for compliance.

- Cursor deepens its agentic platform with Slack integration, fast MCP server deployment, and new features for automated code review and Jupyter notebook support.

Collectively, these updates reflect a maturing ecosystem where AI agents are increasingly embedded in daily development, driving measurable gains in productivity, collaboration, and enterprise readiness.

Percentage of code written metric

Windsurf has introduced the Percentage of code written (PCW) metric to help enterprises assess whether AI-powered assistants are increasing code output as teams become more familiar with the tools. PCW is designed as a directional signal that is difficult to manipulate and only increases with genuine product improvement or adoption.

PCW is calculated at commit time as:

PCW = 100 × W / (W + D),

where W is the number of new, persisted bytes that originated from an accepted AI suggestion (tab completion or Cascade edit) and D is the number of new bytes the developer typed manually.

Because the measurement happens just before the code lands, any AI text that is later trimmed or rewritten by the developer counts toward D rather than W, discouraging superficial inflation of the metric.
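The formula is simple, but the attribution rule is what makes it hard to game. Here is a minimal Python sketch of PCW. It assumes a hypothetical representation in which each run of new text persisted at commit time already carries an origin tag. The `Span` type and the `"ai"`/`"human"` labels are illustrative and are not part of Windsurf's telemetry. AI text that the developer trimmed or rewrote is tagged `"human"`, which mirrors the rule above.

```python
from dataclasses import dataclass
from typing import Iterable, Literal

# Hypothetical origin labels: "ai" = accepted suggestion persisted unchanged,
# "human" = typed manually, or AI text the developer later trimmed or rewrote.
Origin = Literal["ai", "human"]


@dataclass(frozen=True)
class Span:
    """A run of new text persisted in a commit (illustrative model, not Windsurf's)."""
    text: str
    origin: Origin


def pcw(spans: Iterable[Span]) -> float:
    """Percentage of code written: 100 * W / (W + D), counted in bytes at commit time."""
    w = d = 0
    for span in spans:
        size = len(span.text.encode("utf-8"))  # PCW counts bytes, not characters
        if span.origin == "ai":
            w += size
        else:
            d += size
    total = w + d
    return 100.0 * w / total if total else 0.0  # empty commit: report 0 rather than divide by zero


commit = [
    Span("def add(a, b):\n", "human"),  # 15 bytes typed by the developer (D)
    Span("    return a + b\n", "ai"),   # 17 bytes kept from a tab completion (W)
]
print(pcw(commit))  # 100 * 17 / 32 = 53.125
```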

Wave 10 instrumentation began attributing Cascade’s agentic edits to W, which pushed typical customer PCW numbers well into the 90% range. Windsurf’s own stats illustrate the gap between agentic and non-agentic workflows:

When interpreting PCW, Windsurf recommends looking at week-scale trends rather than day-to-day noise. A rule of thumb is that real-world throughput gains (PR cycle time, story points completed, etc.) run at roughly half the PCW value:

Several caveats still apply. PCW skews toward boilerplate that is cheap to generate but may understate time spent on complex logic, ignores deletions, and counts documentation bytes alongside executable code. Toy projects can momentarily inflate org-wide numbers, so filters by sub-team or date range are advised. Even so, the metric has proven resilient: if AI suggestions become shorter, rarer, or less useful, W falls and PCW drops accordingly.
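The advice to read weekly trends, to scope by sub-team, and to treat throughput gains as roughly half of PCW can be combined into one small aggregation. The sketch below assumes a hypothetical export of per-commit `(date, team, W, D)` records. It does not reflect the actual shape of Windsurf's analytics API. It sums bytes per ISO week before dividing, then applies the halving rule of thumb quoted above.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import date

# Hypothetical per-commit export: (commit date, team, W bytes, D bytes).
Record = tuple[date, str, int, int]


def weekly_pcw(records: list[Record], team: str | None = None) -> dict[tuple[int, int], float]:
    """Sum W and D per ISO week (optionally for one team), then apply PCW = 100 * W / (W + D)."""
    totals: dict[tuple[int, int], list[int]] = defaultdict(lambda: [0, 0])
    for day, record_team, w, d in records:
        if team is not None and record_team != team:
            continue  # scope to one sub-team so a toy project elsewhere cannot inflate the trend
        iso_year, iso_week, _ = day.isocalendar()
        totals[(iso_year, iso_week)][0] += w
        totals[(iso_year, iso_week)][1] += d
    # Summing bytes before dividing weights each commit by size instead of averaging daily percentages.
    return {week: 100.0 * w / (w + d) for week, (w, d) in sorted(totals.items()) if w + d}


def rough_throughput_gain(pcw_value: float) -> float:
    """Windsurf's rule of thumb: real-world throughput gains run at about half the PCW value."""
    return pcw_value / 2


records = [
    (date(2025, 6, 2), "payments", 800, 200),
    (date(2025, 6, 4), "payments", 600, 400),
    (date(2025, 6, 10), "payments", 900, 100),
    (date(2025, 6, 10), "sandbox", 5000, 0),  # toy project that would skew an org-wide view
]
for (year, week), value in weekly_pcw(records, team="payments").items():
    print(f"{year}-W{week}: PCW {value:.1f}%, rough gain {rough_throughput_gain(value):.1f}%")
```

If you drop the `team` filter, the sandbox commit pushes week 24 from 90% to about 98%. The caveat about toy projects is meant to catch exactly this kind of distortion.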

PCW is queryable through Windsurf’s analytics API, allowing teams to correlate it with their own delivery KPIs and developer-sentiment surveys. Windsurf plans to keep refining the telemetry—especially around delete/modify flows—to make PCW an even clearer barometer of agent-driven productivity.

GitHub Copilot

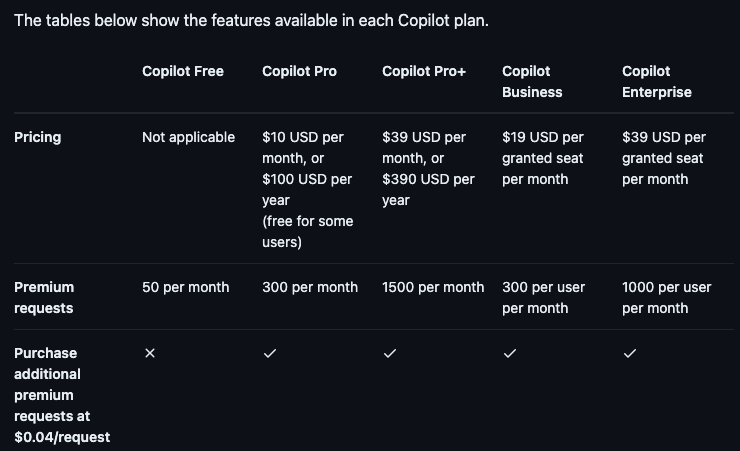

1. Monthly quotas on premium models

GitHub Copilot now enforces monthly quotas on premium model requests. All models except the base GPT-4.1 (and GPT-4o, which is being deprecated) count as premium. Copilot Business users receive 300 premium requests per month. Each model has a multiplier that sets how many premium requests a single interaction consumes: for example, one Claude Opus 4 request consumes 10 premium requests, while one Gemini 2.0 Flash request consumes 0.25. Additional premium requests can be purchased on a pay-as-you-go basis.
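Multipliers make it easy to underestimate consumption, so a quick budget check helps. The sketch below uses only the three multipliers quoted above and the Copilot Business allowance of 300. The request mix is a made-up example. For the full list, check GitHub's model-multiplier table. The sketch does not price the overage, because the per-request rate is not given here.

```python
# Multipliers quoted in this digest; GitHub's model-multiplier table is the authoritative source.
MULTIPLIERS = {
    "GPT-4.1": 0.0,           # base model: does not consume premium requests
    "Gemini 2.0 Flash": 0.25,
    "Claude Opus 4": 10.0,
}
MONTHLY_ALLOWANCE = 300  # Copilot Business premium requests per user per month


def premium_usage(requests: dict[str, int]) -> tuple[float, float]:
    """Return (premium requests consumed, amount beyond the monthly allowance)."""
    # An unknown model name raises KeyError instead of silently counting as free.
    used = sum(MULTIPLIERS[model] * count for model, count in requests.items())
    return used, max(0.0, used - MONTHLY_ALLOWANCE)


# Hypothetical month: 500 base-model chats, 200 Gemini Flash requests, 27 Opus requests.
print(premium_usage({"GPT-4.1": 500, "Gemini 2.0 Flash": 200, "Claude Opus 4": 27}))
# (320.0, 20.0)
```

In this example, the 27 Opus requests alone use 270 of the 300 included premium requests.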

Sources:

- Announcement: https://github.blog/changelog/2025-06-18-update-to-github-copilot-consumptive-billing-experience/

- Plans: https://docs.github.com/en/copilot/about-github-copilot/plans-for-github-copilot#comparing-copilot-plans

- Model multipliers: https://docs.github.com/en/copilot/managing-copilot/understanding-and-managing-copilot-usage/understanding-and-managing-requests-in-copilot#model-multipliers

2. Visual Studio: agent mode, MCP integration, and next edit suggestions

Agent mode is now generally available in Visual Studio, enabling Copilot to plan and execute multi-step coding tasks from a single prompt. This mode allows the agent to reason across files, make iterative changes, and address errors until the goal is achieved, streamlining complex development workflows.

Visual Studio now offers preview support for Model Context Protocol (MCP) servers. This integration lets the agent access external data sources such as logs, test results, and other stack information, making its actions more context-aware and relevant to enterprise environments.

Developers can now choose from additional advanced models, including Gemini 2.5 Pro and GPT-4.1, with GPT-4.1 set as the default. GitHub positions these models as delivering faster and more accurate code suggestions, with corresponding gains in productivity and code quality.

Next edit suggestions (NES) have been introduced to analyze recent code edits and propose the most likely next changes. This feature accelerates manual refactoring and iterative development, offering a workflow similar to Cursor’s Tab feature by guiding developers to the next logical edit location.

Source: https://github.blog/changelog/2025-06-17-visual-studio-17-14-june-release/

3. Remote MCP server with OAuth access

The Remote GitHub MCP Server is now available in public preview, providing a hosted alternative to the local open-source MCP server. This service allows Copilot, Claude Desktop, and other MCP-compatible agents to access live GitHub context — including issues, pull requests, and code files — without requiring any local installation or maintenance.

Authentication is handled through OAuth 2.0 and device-code flows, enabling scoped, revocable access to user accounts. Personal Access Tokens (PATs) are still supported as a fallback. The remote server automatically stays up to date with the open-source codebase, reducing operational overhead for teams. Setup is simple: one-click install for VS Code or paste the server URL into any compatible host.
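For hosts that read a config file instead of offering one-click install, "paste the server URL" usually means adding a single entry. The sketch below generates a VS Code workspace `.vscode/mcp.json`. The endpoint URL and the `"servers"`/`"type": "http"` schema are assumptions based on the github/github-mcp-server README at the time of writing, so verify both before relying on them. The file deliberately stores no token. Under that assumption, an OAuth-capable host prompts the user to sign in on first connection.

```python
import json
from pathlib import Path

# Assumption: endpoint URL and the VS Code "servers"/"type": "http" schema follow the
# github/github-mcp-server README at the time of writing; check it before relying on this.
REMOTE_GITHUB_MCP_URL = "https://api.githubcopilot.com/mcp/"


def vscode_mcp_config(url: str = REMOTE_GITHUB_MCP_URL) -> dict:
    """Workspace MCP config pointing VS Code at the hosted GitHub MCP server.

    No credentials are stored here; authentication is left to the host (OAuth, or a PAT fallback).
    """
    return {"servers": {"github": {"type": "http", "url": url}}}


def write_workspace_config(workspace: Path) -> Path:
    """Write the config to <workspace>/.vscode/mcp.json and return the file path."""
    target = workspace / ".vscode" / "mcp.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(json.dumps(vscode_mcp_config(), indent=2) + "\n", encoding="utf-8")
    return target


print(json.dumps(vscode_mcp_config(), indent=2))
```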

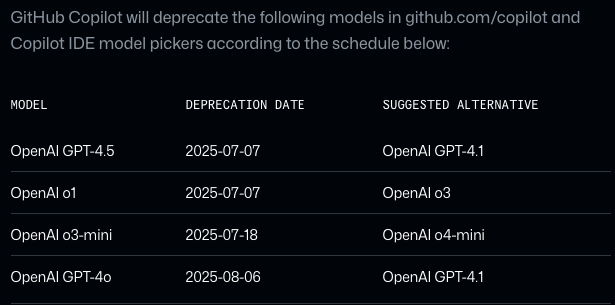

4. Copilot deprecates legacy models

GitHub Copilot will retire four legacy models — GPT-4.5, o1, o3-mini, and GPT-4o — in a staged rollout from July 7 to August 6, 2025. Each model will disappear from Copilot Chat and IDE model selectors immediately after its deprecation date. Suggested replacements are GPT-4.1 for GPT-4.5 and GPT-4o, o3 for o1, and o4-mini for o3-mini.
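Teams that pin model names in their own tooling can apply the suggested replacements mechanically. Below is a small Python sketch. The input list is a hypothetical example of a team's pinned models, and "Claude Sonnet 4" appears only to show that non-retiring models pass through unchanged. The mapping itself comes from GitHub's notice.

```python
# Suggested replacements from GitHub's deprecation notice (retirements run July 7 to August 6, 2025).
REPLACEMENTS = {
    "GPT-4.5": "GPT-4.1",
    "GPT-4o": "GPT-4.1",
    "o1": "o3",
    "o3-mini": "o4-mini",
}


def migrate_models(models: list[str]) -> list[str]:
    """Replace retiring models with their suggested successors, keeping order and dropping duplicates."""
    seen: set[str] = set()
    migrated = []
    for model in models:
        target = REPLACEMENTS.get(model, model)  # models not being retired pass through unchanged
        if target not in seen:
            seen.add(target)
            migrated.append(target)
    return migrated


print(migrate_models(["GPT-4o", "o1", "Claude Sonnet 4", "GPT-4.5"]))
# ['GPT-4.1', 'o3', 'Claude Sonnet 4']
```

Deduplication matters here because GPT-4.5 and GPT-4o both map to GPT-4.1.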

Source: https://github.blog/changelog/2025-06-20-upcoming-deprecation-of-o1-gpt-4-5-o3-mini-and-gpt-4o/

Windsurf

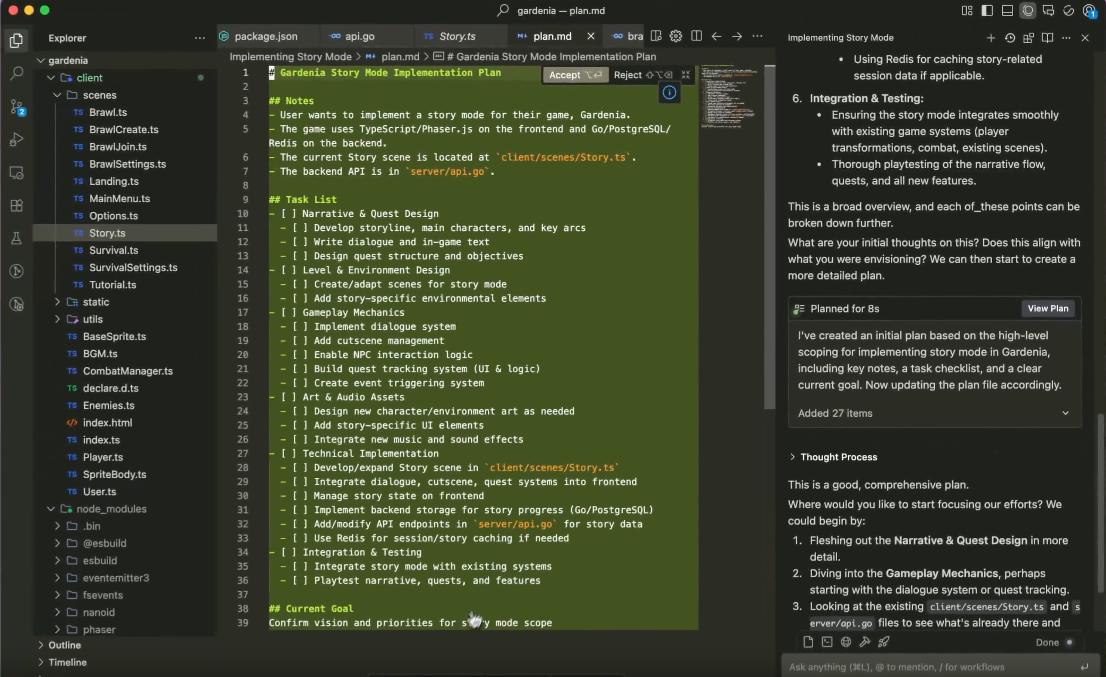

1. Planning mode enables long-running complex tasks

Planning Mode can be activated in a Cascade chat by clicking an icon when a task is long or needs more structured steps. This creates a markdown plan file, which both the developer and agent can use to track high-level goals and tasks. Developers can add, remove, or reorder tasks, and Cascade references it when choosing the next actions.
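The digest does not describe the internal format of Windsurf's plan file. The sketch below therefore assumes a hypothetical plain markdown checklist (`- [ ]` / `- [x]`). It shows why a plain-text plan suits shared control: the developer can reorder lines, and the next action is simply the first unchecked item in document order. This is an illustration of the idea, not Cascade's actual selection logic.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical plan layout: a markdown checklist such as "- [ ] Write tests" or "- [x] Add endpoint".
TASK_RE = re.compile(r"^\s*[-*]\s+\[( |x|X)\]\s+(.*\S)\s*$")


@dataclass
class Task:
    title: str
    done: bool


def parse_plan(markdown: str) -> list[Task]:
    """Collect checklist items in the order they appear; other lines (headings, notes) are ignored."""
    tasks = []
    for line in markdown.splitlines():
        match = TASK_RE.match(line)
        if match:
            tasks.append(Task(title=match.group(2), done=match.group(1).lower() == "x"))
    return tasks


def next_task(tasks: list[Task]) -> Task | None:
    """First unfinished task in document order, so reordering the file reprioritizes the work."""
    return next((task for task in tasks if not task.done), None)


plan = """# Goal: add CSV export
- [x] Add export endpoint
- [ ] Write integration tests
- [ ] Update API docs
"""
print(next_task(parse_plan(plan)).title)  # Write integration tests
```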

A larger reasoning model, o3, now handles plan creation and revision, while less expensive models execute the detailed edits and commands.

Storing the plan file on disk enables version control, review workflows, and future team collaboration. When Cascade learns new information, it automatically updates the plan and notifies the developer of these changes.

This is a significant move toward supporting more complex, long-running workflows, based on common industry best practices.

Planning Mode is available now on all paid plans at no extra cost.

Source: https://windsurf.com/blog/windsurf-wave-10-planning-mode

2. Cascade gains full workflow awareness with Windsurf browser

Windsurf has introduced an AI-powered Chromium-based browser that extends Cascade's flow-aware context beyond the IDE and terminal. This browser allows developers to debug applications, inspect console logs, and browse documentation, while Cascade automatically detects open tabs and page content. This removes the need for manual copy-pasting or sharing URLs, and lets Cascade pull logs, read DOM elements, and use third-party web pages as part of its reasoning. Many of these features are currently manual, but automation is planned.

The browser builds on flow-awareness concepts from Wave 9, with the SWE-1 model now able to reason directly over browser events for improved accuracy. When used with the Windsurf Editor, the browser gives Cascade end-to-end visibility into developer activity, enabling more effective AI assistance across all major surfaces. Future updates aim to let Cascade perform browser actions autonomously, using historical context from the shared timeline to guide its behavior.

Source: https://windsurf.com/blog/windsurf-wave-10-browser

3. Windsurf launches EU production cluster

Windsurf has launched a dedicated production cluster in Frankfurt, Germany, ensuring that all data processing, storage, and model inference remain within EU borders. The cluster relies on third-party models hosted in Germany and provides full feature parity from day one, including the Windsurf Editor and all first-party plugins.

This deployment supports strict data locality requirements for European enterprises and adds to Windsurf’s existing compliance guarantees, such as HIPAA, hybrid on-premises deployment, and FedRAMP High / IL5 authorization. The move is aimed at organizations needing in-region data processing and storage.

Source: https://windsurf.com/blog/windsurf-wave-10-ux-enterprise

Cursor

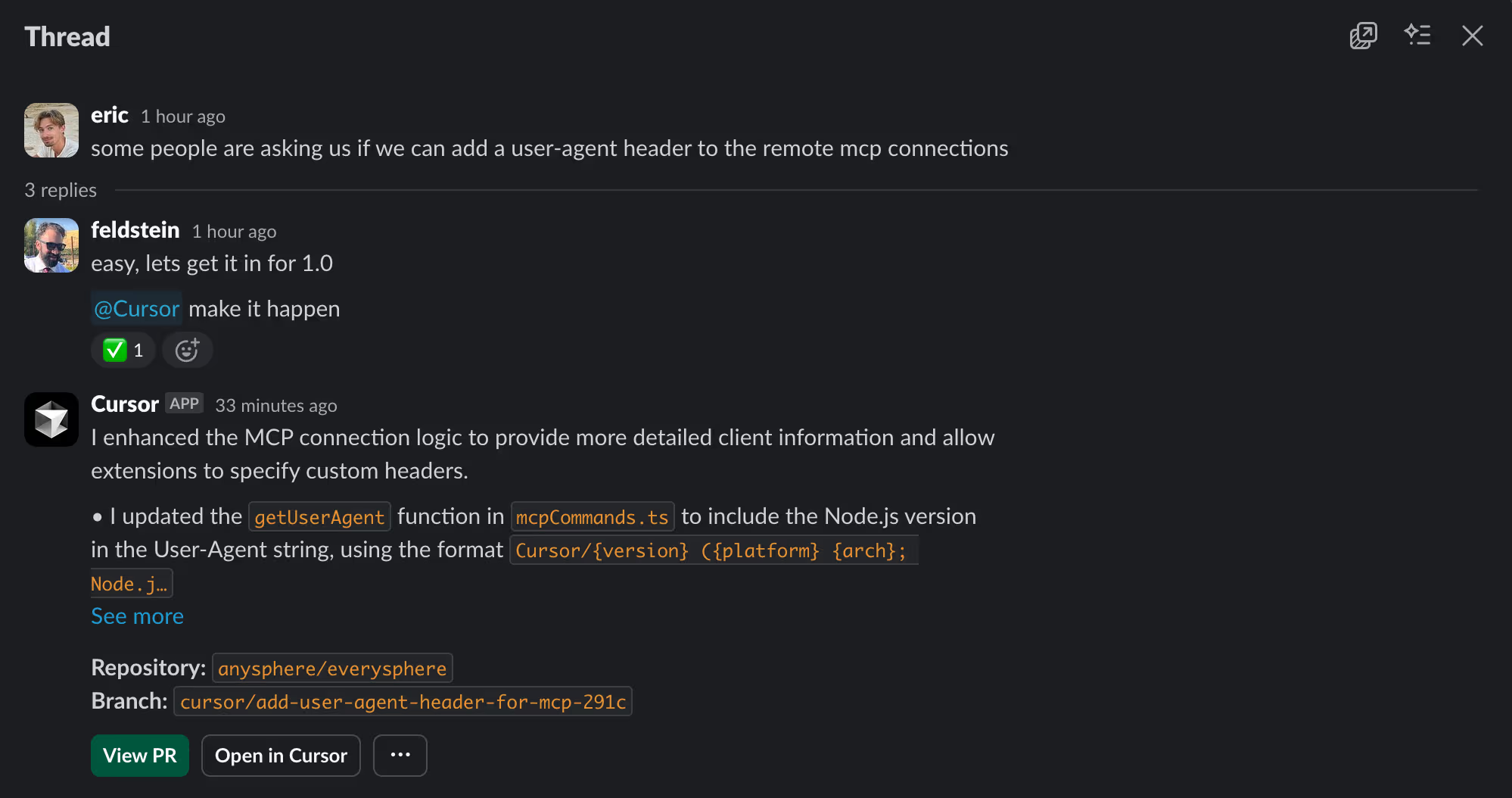

1. Autonomous agents integrated with Slack

Cursor now allows teams to launch background agents directly from Slack, bringing them into the same channel where work is discussed. The agent can read the entire message thread, so there is no need to open a separate issue or copy context — the conversation itself becomes the prompt.

After starting, the agent runs autonomously, can be opened in Cursor for a live view, and posts status updates back to Slack. When finished, it creates a pull request and posts the link in the chat, keeping the feedback loop inside the conversation. Developers can also list active agents from Slack, making autonomous workflows a first-class part of day-to-day collaboration and pushing Cursor further toward an agentic development platform.

Source: https://www.cursor.com/changelog/1-1

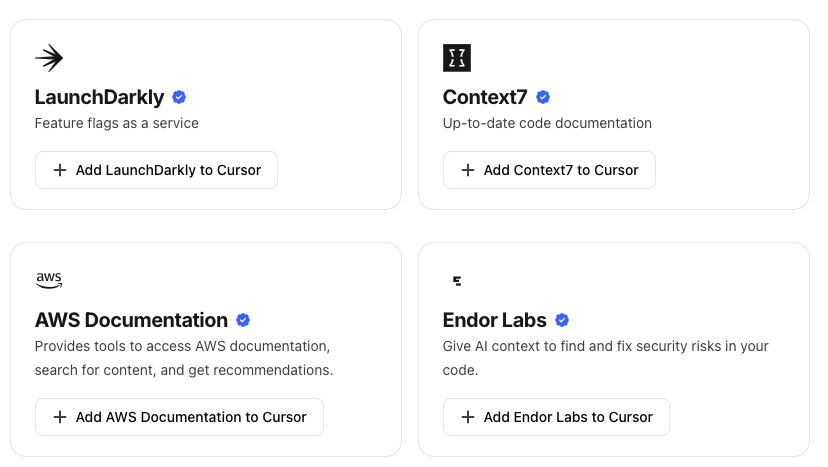

2. Fast MCP server deployment with integration hub

Cursor now offers one-click installation for MCP servers, making it easier to add new integrations. OAuth authentication is supported for quick and secure setup. The integration catalog includes 28 tools, such as Context7, HuggingFace, DuckDB, and GitHub, covering a range of agentic development needs.

A maintained registry of approved integrations lets teams find and deploy tools quickly, reducing setup time and simplifying agentic automation.
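One-click install typically works through a link that carries the server definition. The sketch below builds such a link for Context7. The `cursor://anysphere.cursor-deeplink/mcp/install?name=...&config=<base64 JSON>` format is an assumption based on Cursor's MCP documentation as we understand it, so verify it against the current docs. The `npx` command with `@upstash/context7-mcp` is the package Upstash publishes for Context7. A decode helper is included so that you can check a link before sharing it.

```python
import base64
import json
from urllib.parse import parse_qs, urlencode, urlparse

# Assumed link format; confirm against Cursor's current MCP documentation.
INSTALL_PREFIX = "cursor://anysphere.cursor-deeplink/mcp/install"


def cursor_mcp_install_link(name: str, server_config: dict) -> str:
    """Encode an MCP server definition as base64 JSON and wrap it in an install link."""
    encoded = base64.b64encode(json.dumps(server_config).encode("utf-8")).decode("ascii")
    return f"{INSTALL_PREFIX}?{urlencode({'name': name, 'config': encoded})}"


def decode_install_link(link: str) -> dict:
    """Inverse of cursor_mcp_install_link, useful for reviewing a link before sharing it."""
    encoded = parse_qs(urlparse(link).query)["config"][0]
    return json.loads(base64.b64decode(encoded))


link = cursor_mcp_install_link("context7", {"command": "npx", "args": ["-y", "@upstash/context7-mcp"]})
print(link)
```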

Sources:

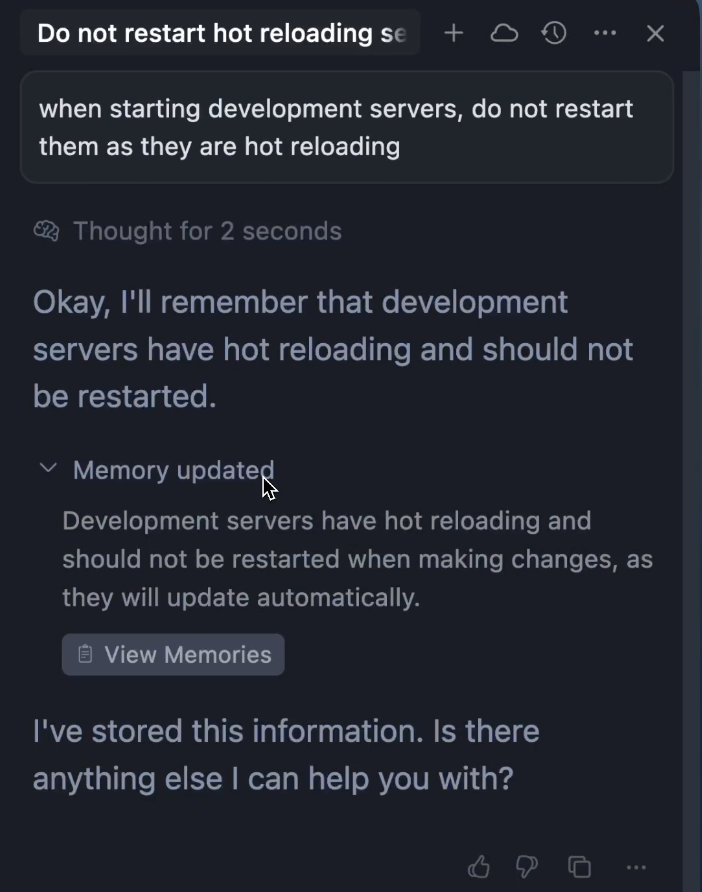

3. Automated rule creation via memories

Cursor now supports Memories, a feature that automatically creates rules from your Chat conversations. This allows Cursor to learn your preferences over time and adjust its behavior to better fit your workflow, reducing the need to write rules manually.

Memories are currently in beta, disabled by default, and require privacy mode to be turned off before they can be enabled.

Sources:

4. Agent in Jupyter Notebooks

Cursor’s agent can now create and edit multiple cells directly within Jupyter notebooks, streamlining research and data science workflows. This feature is currently available only when using Sonnet models.

Source: https://www.cursor.com/changelog/1-0

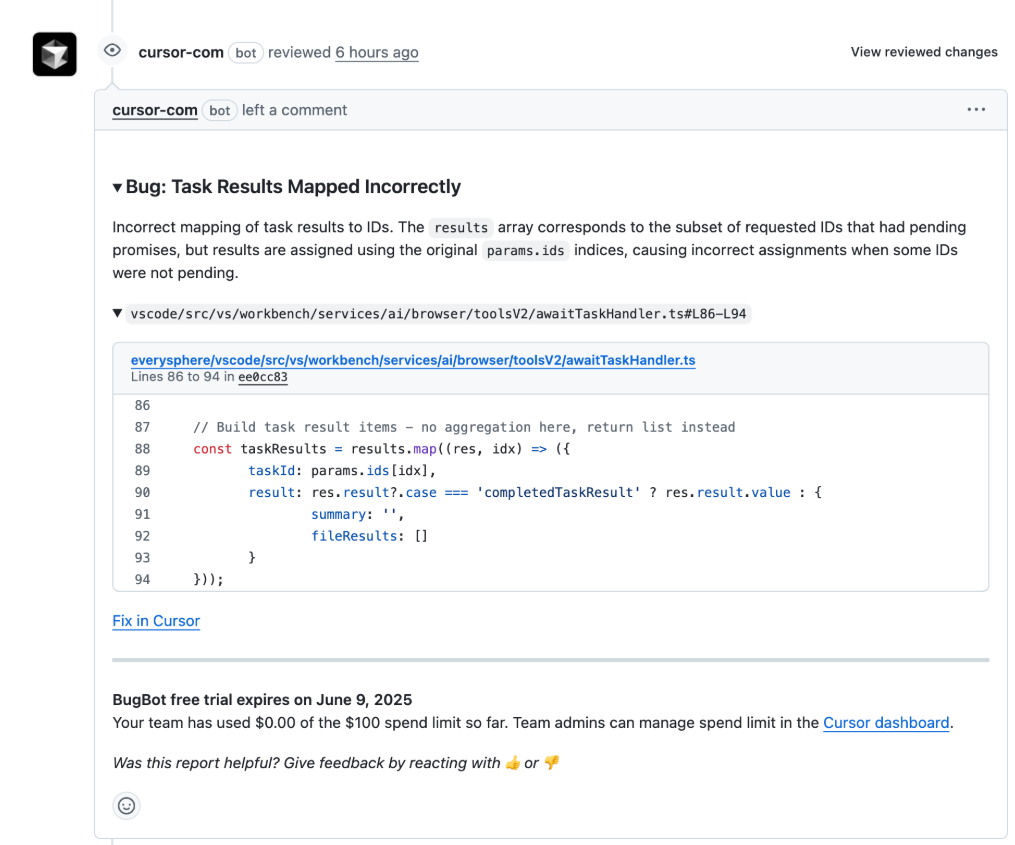

5. Automated code review with BugBot

Cursor has introduced BugBot, an automated code review agent that analyzes pull requests on GitHub and leaves comments identifying potential issues. BugBot runs automatically on each PR update and can also be triggered manually by commenting “bugbot run” on the pull request. Each comment includes a “Fix in Cursor” link, which opens the Cursor Composer agent in collaborative mode to address the problem directly. BugBot currently requires Max mode to be enabled and supports only GitHub repositories.
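Because the manual trigger is just a PR comment, it can be scripted, for example after a force-push from CI. The sketch below only builds the request for GitHub's REST "create an issue comment" endpoint. Pull request conversation comments go through the Issues API. The owner, repository, PR number, and token are placeholders. Whether BugBot reacts to comments posted through the API by a bot or service account is an assumption that we have not verified.

```python
import json
import urllib.request


def bugbot_rerun_request(owner: str, repo: str, pr_number: int, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST that comments 'bugbot run' on a pull request.

    Every pull request is also an issue, so its conversation comments use the Issues API.
    """
    url = f"https://api.github.com/repos/{owner}/{repo}/issues/{pr_number}/comments"
    return urllib.request.Request(
        url,
        data=json.dumps({"body": "bugbot run"}).encode("utf-8"),
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


# Usage with placeholders; sending needs a token that can comment on the repository:
# req = bugbot_rerun_request("my-org", "my-repo", 42, token)
# with urllib.request.urlopen(req) as resp:
#     print(resp.status)  # GitHub answers 201 Created when the comment is posted
```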

Source: https://docs.cursor.com/bugbot
