GPT-5, multi-cloud AI, and next-gen developer tools: key updates from OpenAI, Anthropic, GitHub, and Cursor

EPAM AI SDLC experts explore how GPT-5’s routed system, multi-cloud GPT-OSS deployments, and new developer tools in Copilot, VS Code, and Cursor are shaping the future of autonomous coding and AI-driven workflows.

This digest was prepared by:

- Alex Zalesov, Systems Architect

- Tasiana Hmyrak, Director of Technology Solutions


This issue explores the latest advancements in AI infrastructure and tooling, highlighting key updates that are shaping the future of autonomous coding agents:

- OpenAI positions GPT-5 as a routed system, not a single model, and the release is already landing in Copilot and Cursor.

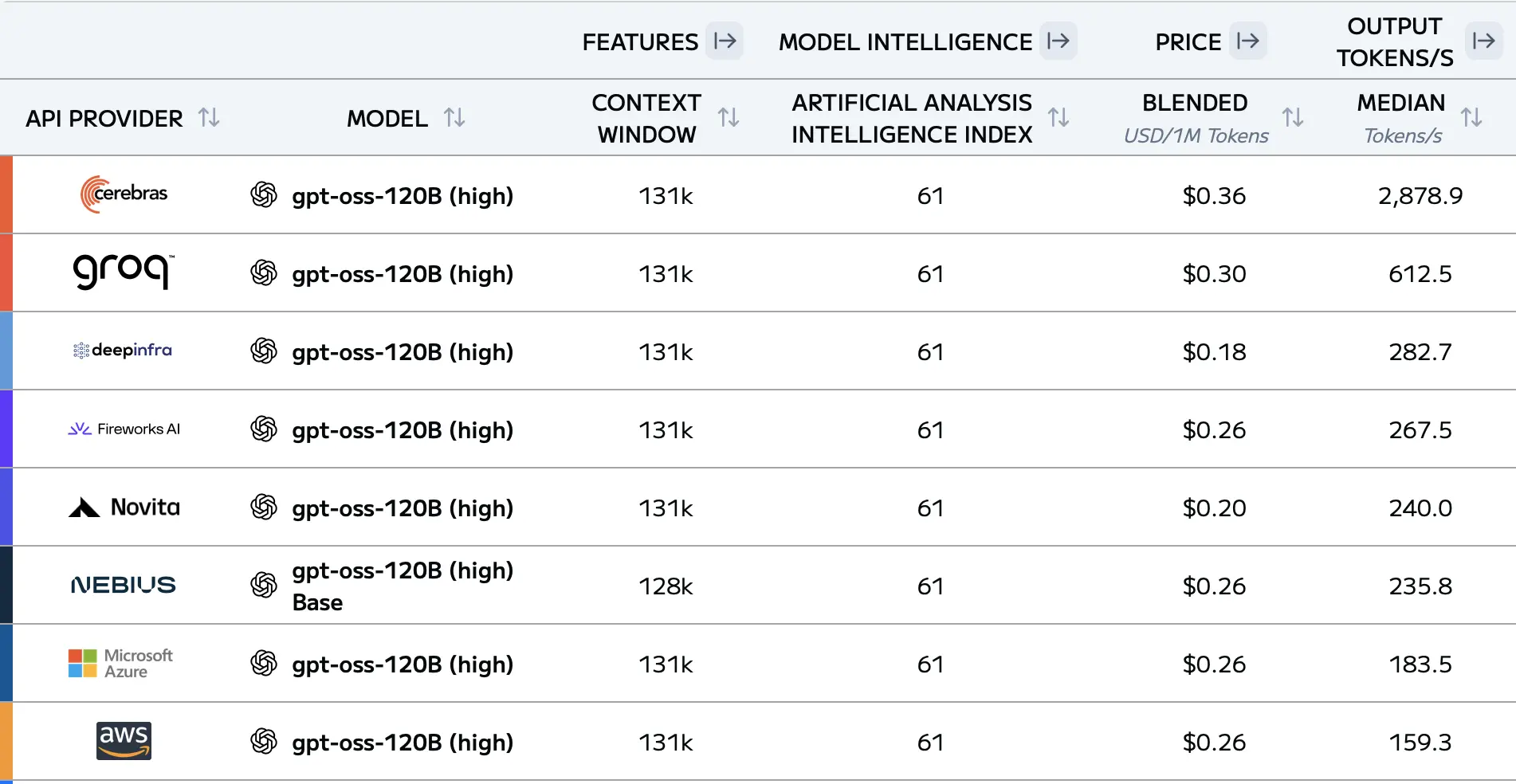

- Open models go multi-cloud: gpt-oss-120b and gpt-oss-20b are now deployable on AWS and GCP. Cerebras, a separate inference provider, can serve gpt-oss-120b at about 3,000 tokens/s, a distinctive speed advantage for autonomous agents that need low latency. The smaller gpt-oss-20b can run locally for teams that need to keep data on their own machines.

- On the tooling side, GitHub and VS Code add agent-governance features — per-agent tool allowlists, task-plan visibility, checkpoints, system notifications, Git worktrees, and path-scoped instructions. Copilot trims its model lineup (GPT-4o removed) and previews Opus 4.1 in Ask-only mode. Cursor focuses on steerability, observability, and native terminal support, and ships a multi-IDE CLI.

GPT-5: a system, not a single model

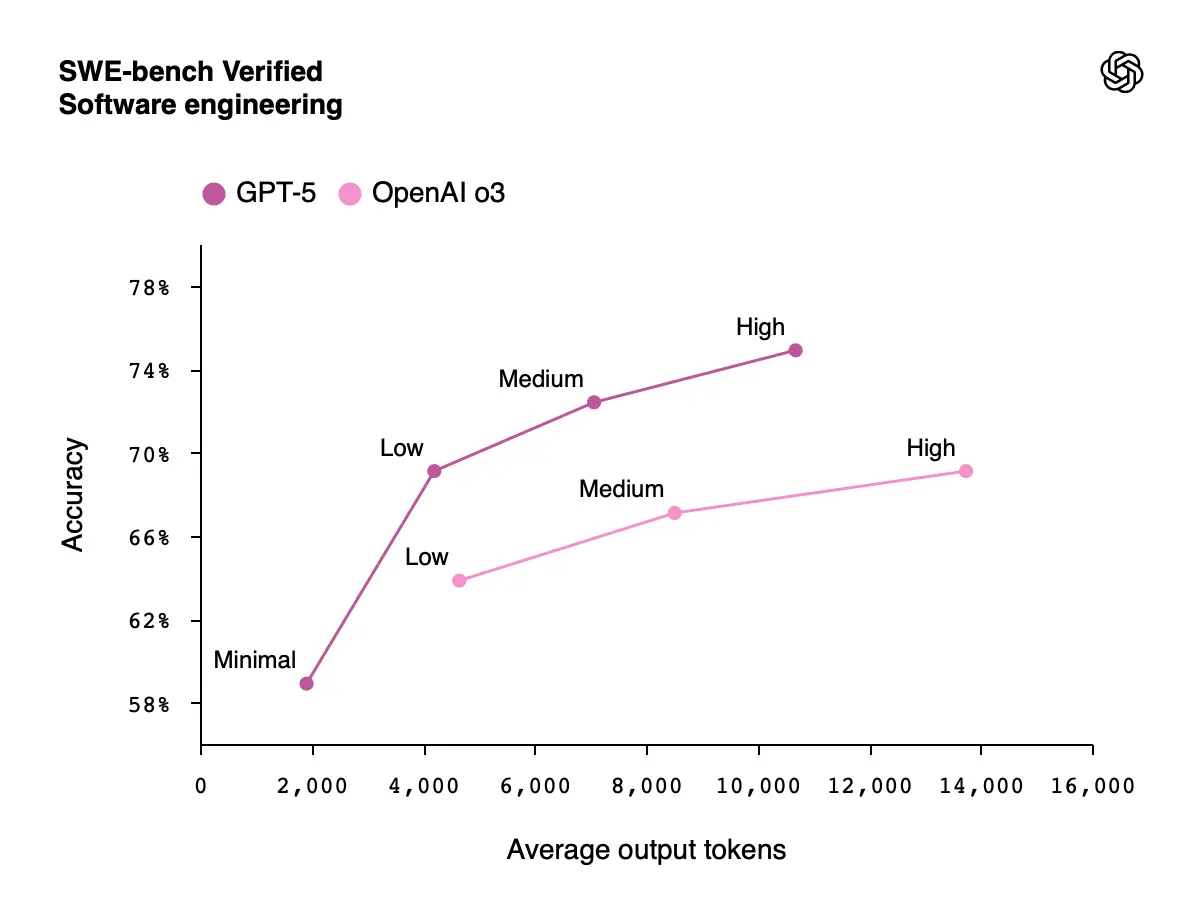

OpenAI framed GPT-5 as a system rather than a monolithic model. It combines a low-latency "smart and fast" model for most queries, a "deeper reasoning" model for complex tasks, and a real-time router that dispatches between them based on conversation type, complexity, tool needs, and explicit intent.
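The routing idea can be sketched in a few lines. This is only an illustration: OpenAI has not published the router's actual signals or thresholds, so the `Request` fields, the `estimate_complexity` heuristic, and the 0.5 threshold below are all hypothetical. Only the two model labels come from the progression table in this digest.

```python
from dataclasses import dataclass

# Labels taken from the progression table; everything else here is hypothetical.
FAST_MODEL = "gpt-5-main"
REASONING_MODEL = "gpt-5-thinking"


@dataclass
class Request:
    prompt: str
    needs_tools: bool = False           # assumed signal: the task requires tool calls
    wants_deep_reasoning: bool = False  # assumed signal: explicit intent ("think hard")


def estimate_complexity(prompt: str) -> float:
    """Toy heuristic: longer prompts score as more complex, capped at 1.0."""
    return min(len(prompt.split()) / 200.0, 1.0)


def route(request: Request, threshold: float = 0.5) -> str:
    """Return a model label using illustrative rules, not OpenAI's real logic."""
    if request.wants_deep_reasoning or request.needs_tools:
        return REASONING_MODEL
    if estimate_complexity(request.prompt) >= threshold:
        return REASONING_MODEL
    return FAST_MODEL


if __name__ == "__main__":
    print(route(Request("What is a Git worktree?")))
    print(route(Request("Refactor the billing module", wants_deep_reasoning=True)))
```

The point of the sketch is the shape of the design: one entry point, several signals, and a cheap default path, so most queries never pay the latency cost of the reasoning model.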

Table: model progressions (previous → GPT-5)

| Previous model | GPT-5 model |
|---|---|
| OpenAI o4-mini | gpt-5-thinking-mini |
| OpenAI o3 Pro | gpt-5-thinking-pro |
| OpenAI o3 | gpt-5-thinking |
| GPT-4o-mini | gpt-5-main-mini |
| GPT-4o | gpt-5-main |
| GPT-4.1-nano | gpt-5-thinking-nano |

GPT-OSS goes multi-cloud

OpenAI’s GPT-OSS models are now available on AWS and GCP in addition to Azure, the first time OpenAI models can be deployed on those two clouds. If your application runs on AWS, you can now choose among models from Anthropic, OpenAI, and other open-weight providers. On GCP, you gain access to all major model providers. For enterprise teams, this expands deployment flexibility and reduces lock-in when standardizing AI across environments.

Cerebras is an inference service provider that builds custom wafer-scale ASIC systems to accelerate LLM inference. Because it does not produce its own models, Cerebras has historically served open-weight models. With the GPT-OSS model family, Cerebras now runs OpenAI’s gpt-oss-120b on the Cerebras AI Inference Cloud at what it describes as record speeds of about 3,000 tokens/second, materially lowering end-to-end latency for agentic coding workflows.

This level of speed isn’t necessary for chat interfaces, since humans can only read about 15 tokens per second. It also doesn’t benefit collaborative agents much, as they’re limited by the time it takes for a human to approve actions. The real advantage is for autonomous coding agents that can take on a task and complete it end to end without human intervention.
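A quick back-of-the-envelope calculation makes the argument concrete. The two rates below are the figures quoted in this digest. The 60,000-token workload is a hypothetical number chosen for illustration, not a measurement of any real agent run.

```python
HUMAN_READING_TPS = 15   # approximate human reading rate quoted above
CEREBRAS_TPS = 3_000     # approximate gpt-oss-120b throughput quoted above


def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Time needed to emit `output_tokens` at a steady generation rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


if __name__ == "__main__":
    # Hypothetical workload: an autonomous agent emitting 60,000 tokens
    # of plans, tool calls, and code over one task.
    task_tokens = 60_000
    fast = generation_seconds(task_tokens, CEREBRAS_TPS)
    human_paced = generation_seconds(task_tokens, HUMAN_READING_TPS)
    print(f"At {CEREBRAS_TPS} tokens/s: {fast:.0f} s")
    print(f"At {HUMAN_READING_TPS} tokens/s: {human_paced / 60:.0f} min")
```

A chat user gains nothing beyond the reading rate, but an agent that consumes its own output is limited only by generation speed, which is where the gap matters.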

The smaller gpt-oss-20b also enables a true local coding setup. Previously, achieving strong coding quality typically required cloud inference, which meant sending repository data to a provider and back. With gpt-oss-20b, teams can run inference locally with good results and keep their codebases on their own machines. The trade-off remains: prioritize data isolation and local processing at slightly reduced model capability, or use frontier models for peak coding quality and accept cloud-based inference.

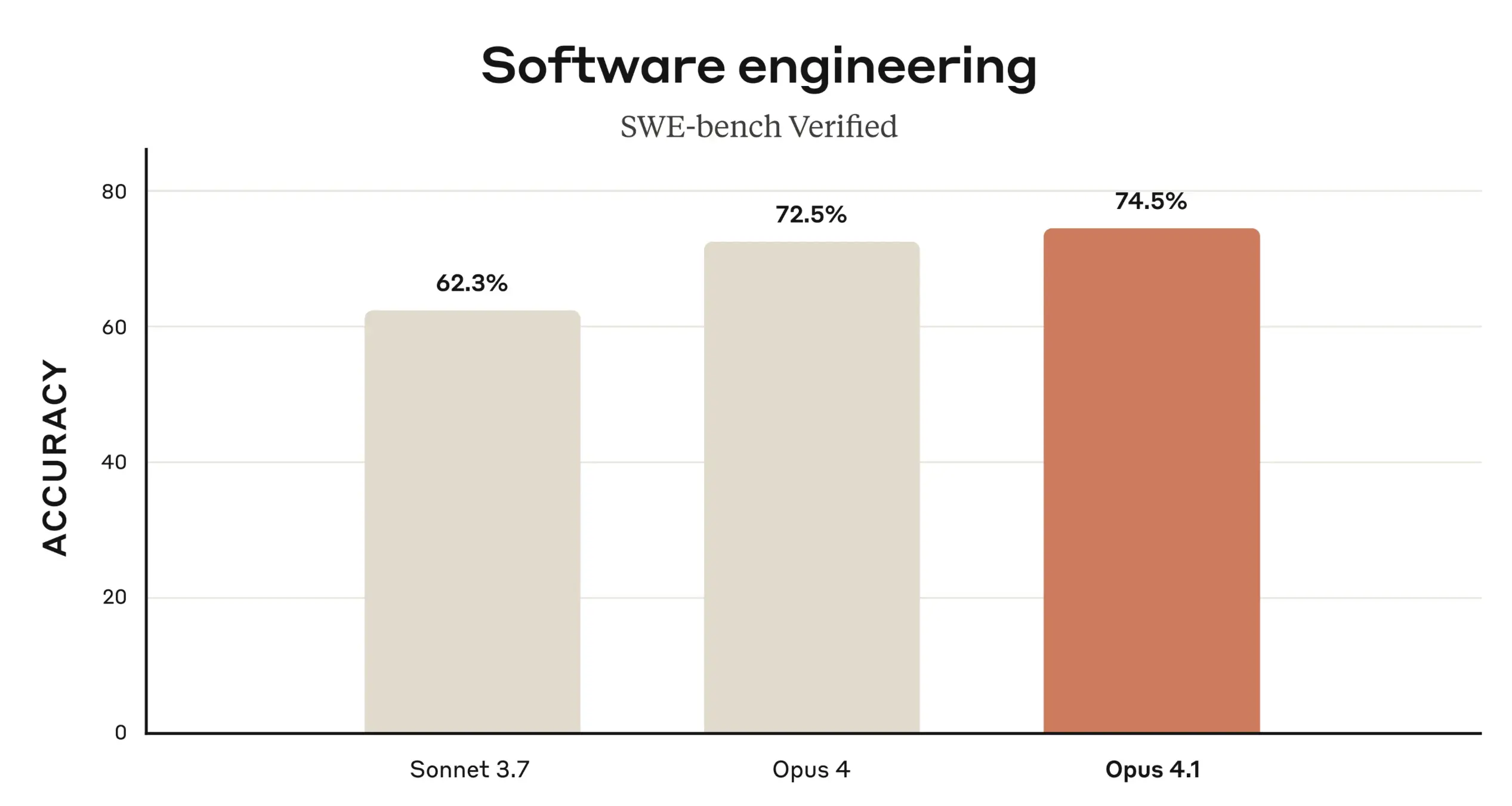

Anthropic upgrades Opus to v4.1

Anthropic has released an incremental update to the Opus model, moving from version 4.0 to 4.1. Performance on benchmarks is similar or slightly improved compared to the previous version. Pricing remains unchanged. The main benefits are increased precision and reliability, which support more complex and longer agentic workflows.

Source: https://www.anthropic.com/news/claude-opus-4-1

GitHub

1. VS Code July release

GPT-5 Public Preview

OpenAI’s latest frontier model, GPT-5, is rolling out in Copilot. Copilot Enterprise and Business administrators must opt in by enabling the new GPT-5 policy in Copilot settings.

Chat Checkpoints

Checkpoints let you return to an earlier point in a chat conversation. Selecting a checkpoint in VS Code reverts both workspace changes and chat history to that point.

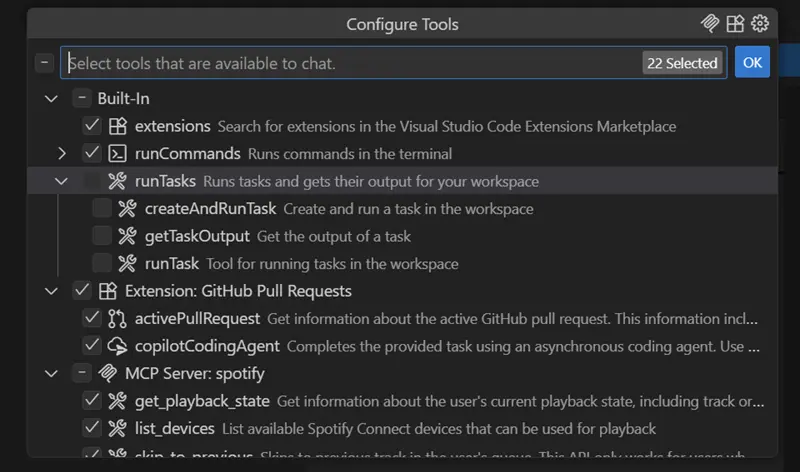

Interactive Tool Picker

Previously, the agent had access to all built-in VS Code tools and those exported by enabled MCP servers. Now, you have fine-grained control over which tools a specific agent can use. A new drop-down menu with a tree structure allows you to enable or disable individual tools. This reduces context window usage by excluding irrelevant tools and increases accuracy by letting the agent choose from a shorter list.

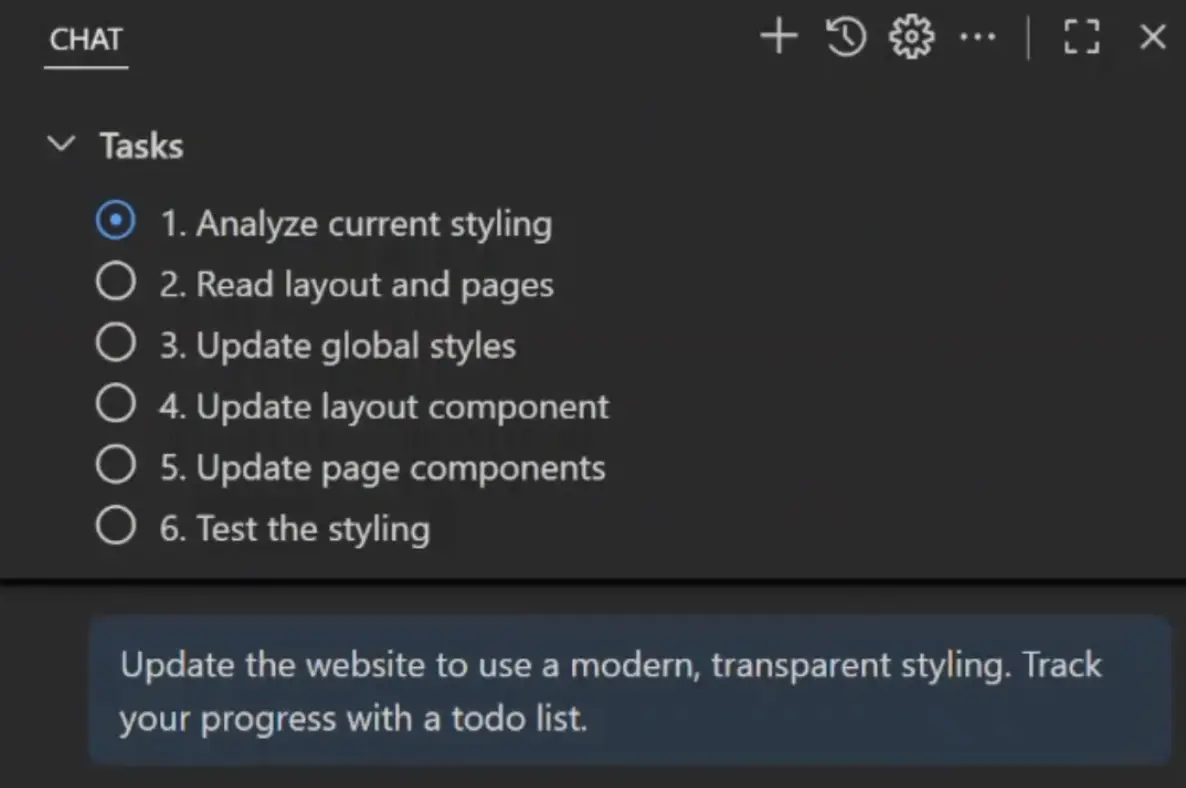
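The effect of a per-agent allowlist can be sketched as a simple filter. This is a toy model rather than VS Code's implementation: the tool names, descriptions, and the word-count cost proxy are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    description: str


def filter_tools(available: list[Tool], allowlist: set[str]) -> list[Tool]:
    """Keep only the tools this agent is allowed to see, preserving order."""
    return [tool for tool in available if tool.name in allowlist]


def context_cost(tools: list[Tool]) -> int:
    """Rough proxy for context usage: words in each name plus description."""
    return sum(1 + len(tool.description.split()) for tool in tools)


if __name__ == "__main__":
    # Hypothetical tool catalog; real tool names in VS Code differ.
    catalog = [
        Tool("read_file", "Read a file from the workspace"),
        Tool("run_tests", "Run the project test suite and report failures"),
        Tool("deploy", "Deploy the current build to the staging environment"),
    ]
    docs_agent_tools = filter_tools(catalog, {"read_file"})
    print([tool.name for tool in docs_agent_tools])
    print(context_cost(catalog), "->", context_cost(docs_agent_tools))
```

Fewer tool descriptions in the prompt means less context spent on options the agent should never pick.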

Task Lists

The agent decomposes assigned tasks into actionable steps and executes them sequentially. You can now view these steps after the agent finishes planning, and the task list updates as the agent executes the plan.

System Notifications for User Approval

Agents may run for extended periods and sometimes require user approval to execute tools. VS Code now sends a system notification when your input is needed, allowing you to approve or decline the action and then continue with your work.

Currently, you need to switch to VS Code to approve or decline actions after receiving a notification. In future versions, you’ll be able to take action directly from the notification.

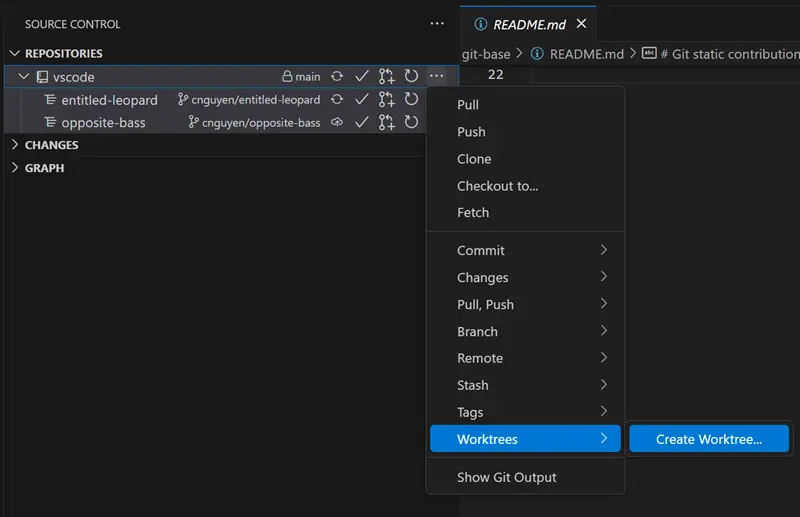

Worktrees Support in VCS

VS Code now supports Git worktrees in the Source Control Repositories view. A worktree checks out a branch into its own working directory while sharing the same underlying repository, so several agents can work in parallel on a single codebase without overwriting each other's files. You can list available worktrees and open one as needed.
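Outside the editor, the same setup can be scripted. The sketch below only builds the `git worktree add` command line for each agent. The `agent/<name>` branch naming and the sibling-directory layout are assumptions for illustration, and POSIX-style paths are assumed.

```python
import shlex
from pathlib import PurePosixPath


def worktree_add_command(repo: PurePosixPath, agent_name: str, base: str = "main") -> list[str]:
    """Build a `git worktree add` command that creates a new branch for one
    agent in a sibling directory of the main checkout."""
    branch = f"agent/{agent_name}"                       # hypothetical naming scheme
    target = repo.parent / f"{repo.name}-{agent_name}"   # hypothetical layout
    return ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(target), base]


if __name__ == "__main__":
    repo = PurePosixPath("/src/app")
    for agent in ("docs", "tests"):
        # Print the command instead of running it, so the sketch has no side effects.
        print(shlex.join(worktree_add_command(repo, agent)))
```

To execute a command for real, pass the returned list to `subprocess.run(cmd, check=True)`. `git worktree list` then shows every checkout attached to the repository.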

Start Coding Agent from the Chat

You can now manage a coding agent session from a dedicated chat editor. This allows you to follow the progress of the coding agent, provide follow-up instructions, and view the agent's responses in the same editor.

You can now view all running agent sessions (local and remote) in a single view.

This improves integration with autonomous coding agents and increases their steerability.

Source: https://code.visualstudio.com/updates/v1_103

2. GPT-4o removed from Copilot Chat

Starting 6 Aug 2025, GPT-4o is no longer available in Copilot Chat; GPT-4.1 is now the default. Code completions still use GPT-4o. Teams relying on GPT-4o-specific behavior should retest prompts.

Source: https://github.blog/changelog/2025-08-06-deprecation-of-gpt-4o-in-copilot-chat/

3. Opus 4.1 preview in Copilot

Anthropic has released Opus 4.1, an incremental update to its largest model. It is available in VS Code for Enterprise and Pro+ plans; Business licenses are excluded.

Opus 4.1 excels at agentic tasks, but Copilot currently offers it only in Ask mode, which limits its benefits for autonomous workflows.

4. Granular path-based agent instructions

Copilot Coding Agent now supports path-scoped instructions. Place .instructions.md files under .github/instructions with YAML front matter that targets specific files or directories. This enables directory-level guidance without bloating prompts.
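As a rough illustration, the helper below renders such a file. The `applyTo` front matter key and its glob syntax reflect our reading of the Copilot documentation and should be verified against the current docs. The file name, glob, and guidance text are hypothetical.

```python
from pathlib import PurePosixPath

INSTRUCTIONS_DIR = PurePosixPath(".github/instructions")


def instructions_path(name: str) -> PurePosixPath:
    """Location of a path-scoped instructions file for the given short name."""
    return INSTRUCTIONS_DIR / f"{name}.instructions.md"


def render_instructions(apply_to: str, guidance: list[str]) -> str:
    """Render YAML front matter plus a Markdown bullet list of guidance.

    `applyTo` is assumed to be the key that scopes the file to a glob.
    """
    lines = ["---", f'applyTo: "{apply_to}"', "---", ""]
    lines += [f"- {item}" for item in guidance]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical example: guidance that applies only to TypeScript API code.
    print(instructions_path("api"))
    print(render_instructions(
        "src/api/**/*.ts",
        ["Validate every request body.", "Return typed error objects."],
    ))
```

Keeping each file narrow means the agent loads API guidance only when it touches API code, instead of carrying every rule in every prompt.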

Cursor

1. Cursor update: steerability and control

Agent Steerability During Sessions

The agent can run for extended periods and is now more steerable while it runs. You can use Option+Enter to queue a message, which the agent will process at the next suitable point, typically after a tool call. Alternatively, use Cmd+Enter to send a message immediately, interrupting the agent's current activity. This allows you to guide the process without stopping and restarting the session.

Sidebar Panel Shows All Agents

You can now view all agents—both autonomous agents running remotely and collaborative agents running locally—in a single sidebar panel.

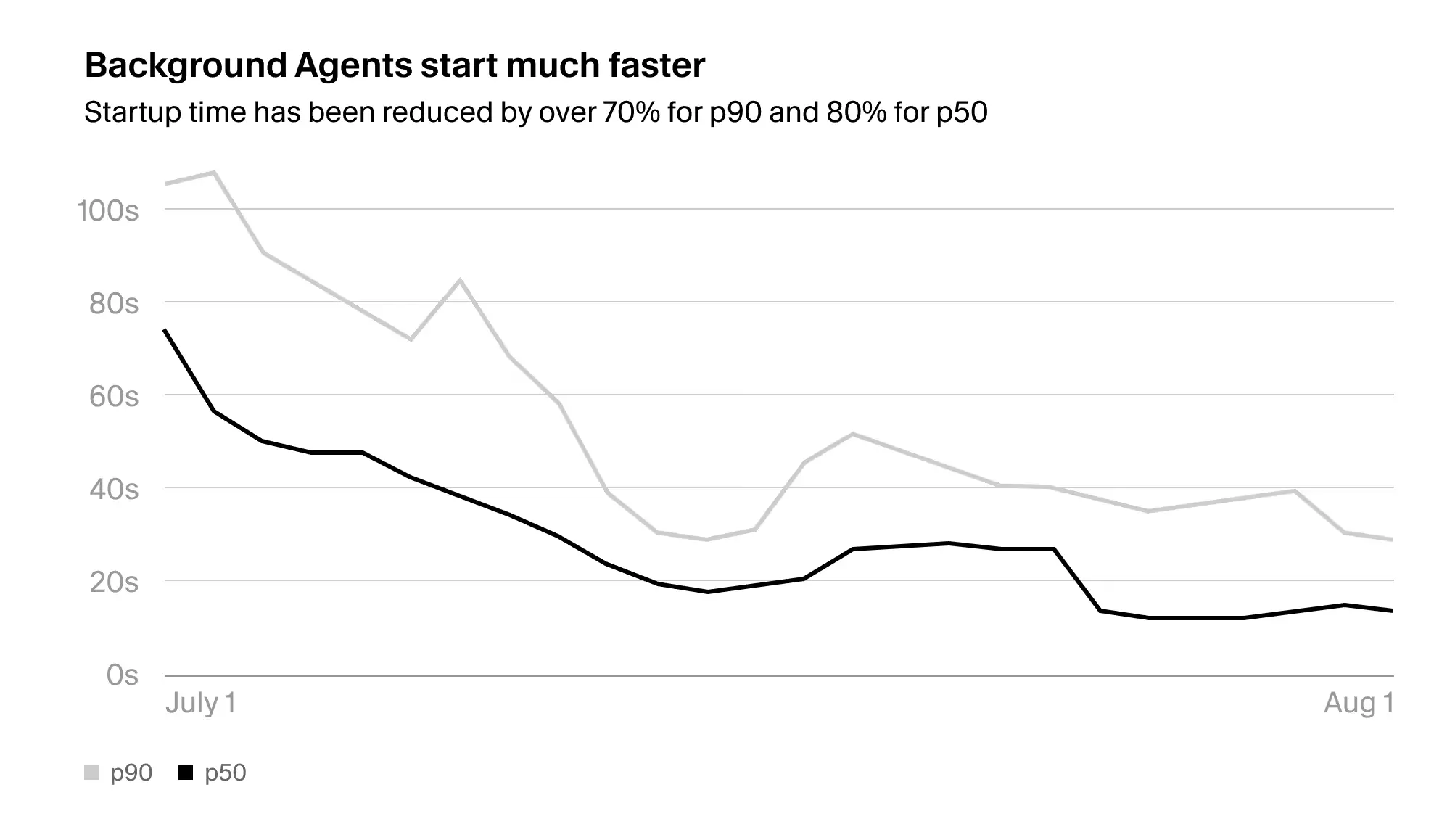

Faster agent startup time

Startup time for background agents has improved substantially, from approximately 80 seconds to 20 seconds.

Agents Can Use Native Terminal

Agents can now use your native terminal. Previously, commands were run in the chat window, so you could not see the commands and their output in your usual terminal.

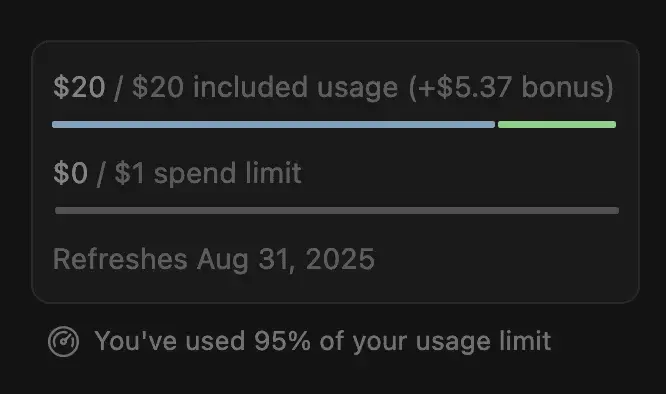

Observability: Context and Usage Limits

There are two improvements to observability. You can now see the context usage for a specific chat session, as well as track how much of your overall usage limit you have spent in the current month.

Source: https://cursor.com/changelog/1-4

2. Cursor CLI: multi-IDE support

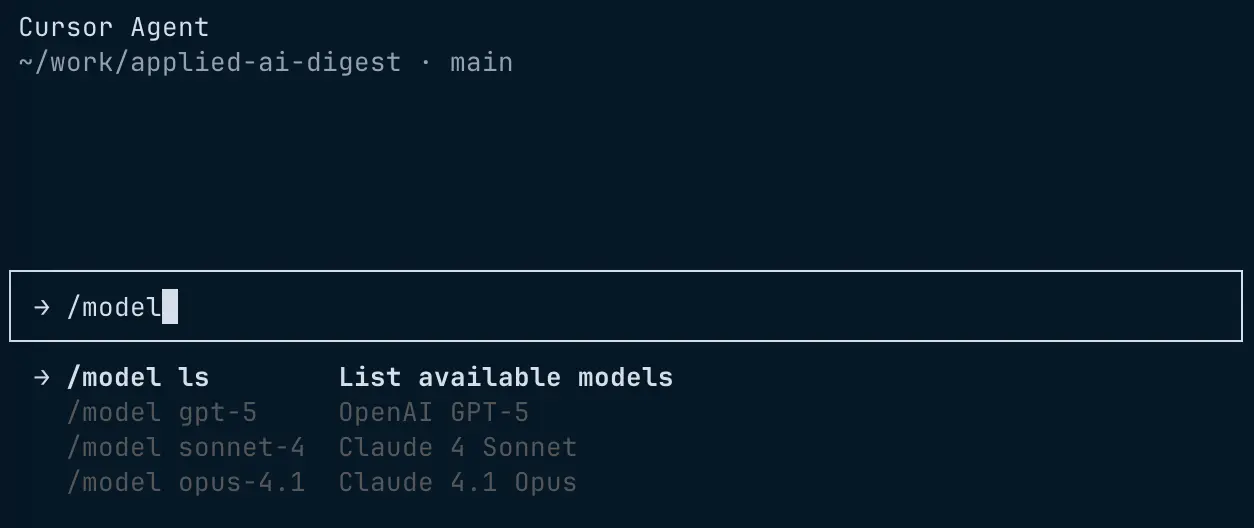

Cursor has released a CLI version of its agent for the terminal, JetBrains IDEs, Visual Studio, and CI/CD pipelines.

The CLI offers a different model set—Opus 4.1, GPT-5, and Claude Sonnet 4.0—and integrates with your Cursor subscription to give unified access to OpenAI and Anthropic models.

The tool is in beta, lacks some features found in Codex CLI and Claude Code, and its security guardrails are still evolving, so test in a restricted environment.

Source: https://cursor.com/blog/cli
