Microsoft Build, Google I/O, and Anthropic: top AI announcements

EPAM AI SDLC experts highlight the most impactful announcements from Microsoft Build, Google I/O, and Anthropic’s Code with Claude. Explore groundbreaking AI advancements, including coding agents, automation tools, and cutting-edge models shaping the future of technology.

This digest was prepared by:

- Siarhei Zemlianik, Solution Architect

- Alex Zalesov, Systems Architect

- Aliaksandr Paklonski, Director of Technology Solutions

- Tatsiana Hmyrak, Director of Technology Solutions

Microsoft Build 2025

Coding Agents

Features in preview

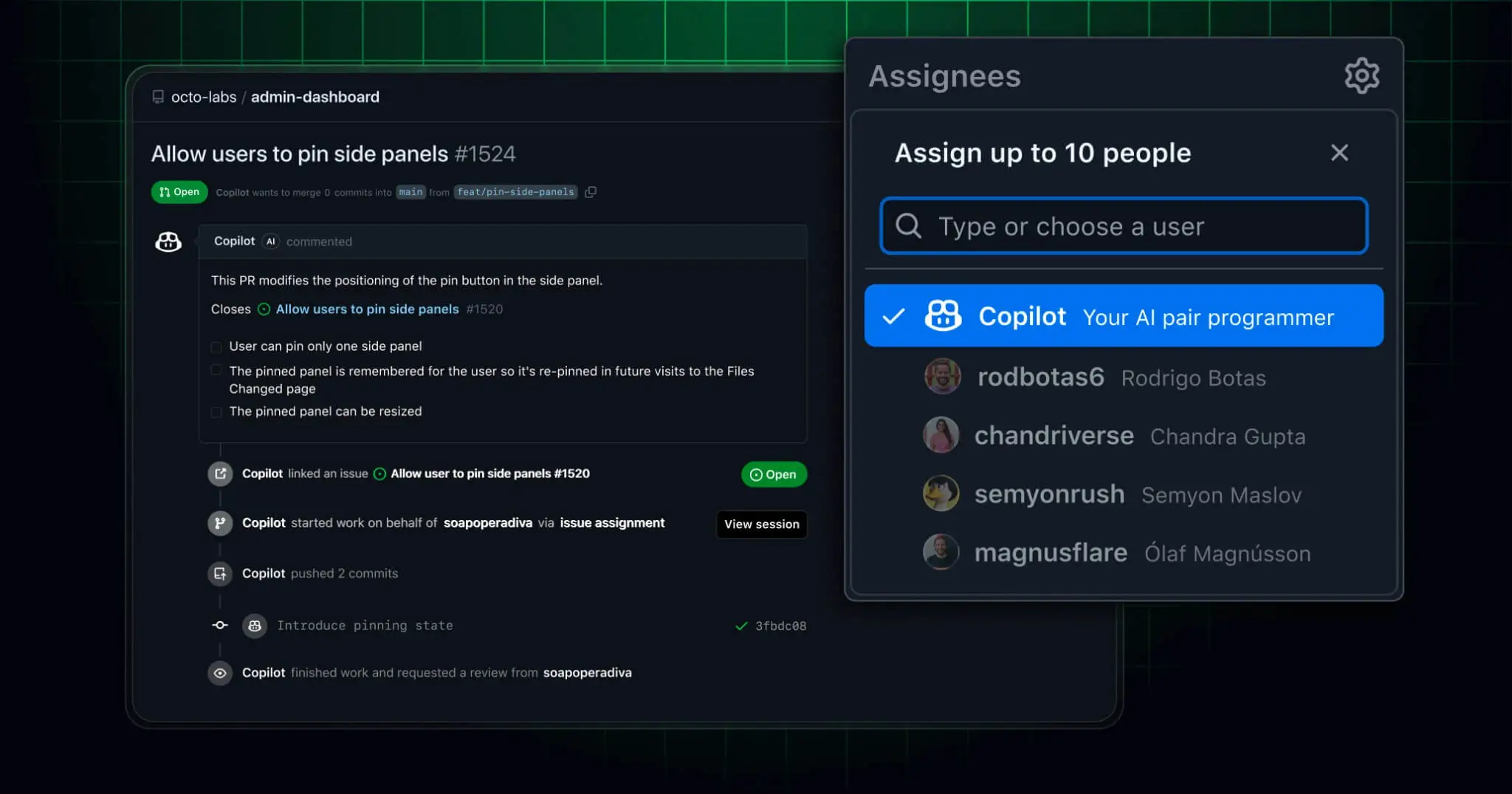

GitHub Copilot coding agent: GitHub Copilot will be able to test, iterate, and refine code in GitHub as an AI agent. The agent starts its work when a developer assigns a GitHub issue to Copilot or prompts it in VS Code. As the agent works, it pushes commits to a draft pull request. Once the pull request is ready, the agent tags it for review and can address a developer's comments and propose code changes following the requests.

GitHub Copilot agent mode: Agent mode will be able to work on complex tasks such as building features, refactoring legacy code, and fixing complicated bugs. In agent mode, Copilot iterates not just on its own output but on the result of that output, and it keeps iterating until it has completed all the subtasks required to fulfill a developer's prompt. Instead of performing only the requested task, Copilot can infer additional tasks that were not specified but are necessary for the primary request to work. It can also catch its own errors, freeing the developer from having to copy and paste from the terminal back into chat.

Agent mode is available in Visual Studio Code (VS Code) and Visual Studio and will be added to JetBrains, Eclipse, and Xcode.
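
The iterate-until-done behavior described for agent mode can be illustrated with a minimal sketch. This is not Copilot's implementation: the names `run_agent_loop`, `fake_execute`, and `fake_repair` are hypothetical, and the "model" is replaced by two stub functions so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class StepResult:
    ok: bool       # did the action succeed?
    output: str    # terminal output the agent can read back


def run_agent_loop(
    subtasks: List[str],
    execute: Callable[[str], StepResult],
    repair: Callable[[str, str], str],
    max_attempts: int = 3,
) -> List[Tuple[str, int, bool]]:
    """Run each subtask, feeding failures back into a repair step until it passes."""
    log = []
    for task in subtasks:
        action = task
        for attempt in range(1, max_attempts + 1):
            result = execute(action)
            log.append((task, attempt, result.ok))
            if result.ok:
                break
            # The agent reads its own error output instead of the developer
            # copying it from the terminal back into chat.
            action = repair(action, result.output)
        else:
            raise RuntimeError(f"gave up on {task!r} after {max_attempts} attempts")
    return log


# Hypothetical stand-ins for "run a command" and "ask the model for a fix".
def fake_execute(action: str) -> StepResult:
    if "tset" in action:
        return StepResult(False, "command not found: tset")
    return StepResult(True, "ok")


def fake_repair(action: str, error_output: str) -> str:
    return action.replace("tset", "test")


log = run_agent_loop(["write code", "run tset suite"], fake_execute, fake_repair)
for entry in log:
    print(entry)
```

The second subtask fails once, is repaired from its own error output, and succeeds on the second attempt. A real agent would also need limits on time and cost, which this sketch reduces to `max_attempts`.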

GitHub Models: A suite of developer tools that lets users build, test, and manage AI features in their applications directly from repositories. The interactive playground lets users tune model parameters and experiment with prompts, helping them understand a model’s behavior, capabilities, and response style. The AI Toolkit extension for Visual Studio Code lets developers experiment with models directly from the IDE. The extension also provides an agent builder for creating and optimizing AI agents.

New app modernization capabilities in GitHub Copilot: Developers can upgrade and modernize Java and .NET apps with GitHub Copilot.

Upgrade for Java helps with upgrading the Java runtime, dependencies, popular frameworks, and project code to newer versions. It can perform the following tasks:

- Analyze the project and its dependencies and propose an upgrade plan.

- Execute the plan to transform the project.

- Automatically fix issues during the process.

- Report all details including commits, logs, and output.

- Perform a check for Common Vulnerabilities and Exposures (CVE) security vulnerabilities and code inconsistencies after the upgrade.

- Show a summary including file changes, updated dependencies, and fixed issues.

- Generate unit tests separately from the upgrade process.

Upgrade for .NET helps with upgrading .NET projects to newer versions of .NET. It can perform the following tasks:

- Analyze your projects and propose an upgrade plan.

- Run tasks to upgrade projects according to the plan.

- Operate in a working branch under a local Git repository.

- Automatically fix issues during the code transformation.

- Report progress and allow access to code changes and logs.

- Learn from the interactive experience with a developer to improve subsequent transformations.

Sources:

- The Agent Awakens: Collaborative Development with GitHub Copilot

- Accelerate Azure Development with GitHub Copilot, VS Code & AI

- Agent Mode in Action: AI Coding with Vibe and Spec-Driven Flows

- Java App Modernization Simplified with AI

- The Future of .NET App Modernization Streamlined with AI

- Develop, Build and Deploy LLM Apps using GitHub Models and Azure AI Foundry

- Reimagining Software Development and DevOps with Agentic AI

Agentic Web

Model Context Protocol

Microsoft and GitHub have joined the Model Context Protocol (MCP) Steering Committee and are delivering broad first-party support for MCP across their agent platform and frameworks, spanning GitHub, Copilot Studio, Dynamics 365, Azure, Azure AI Foundry, Semantic Kernel, Foundry Agents, and Windows 11.

Microsoft and GitHub are announcing two new contributions to the MCP ecosystem:

- Identity and authorization specification: Microsoft’s identity and security teams have collaborated with Anthropic, the MCP Steering Committee and the broader MCP community to design an updated authorization spec. This spec enables people to use their Microsoft Entra ID or other trusted sign-in methods to give agents and large language model (LLM)-powered apps access to data and services such as personal storage drives or subscription services.

- Public, community-driven registry of MCP servers: GitHub and the MCP Steering Committee have collaborated to design a registry service for MCP servers. This registry service allows anyone to implement public or private, up-to-date, centralized repositories for MCP server entries and enable the discovery and management of various MCP implementations with their associated metadata, configurations and capabilities. Check out the GitHub repository.
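
To make the registry idea concrete, here is a minimal in-memory sketch of a service that stores MCP server entries with metadata and supports discovery by capability. It is an illustration only: the `ServerEntry` fields, the `Registry` class, and the example URLs are assumptions made for this sketch and do not reflect the schema of the actual MCP registry project.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class ServerEntry:
    """Hypothetical registry record; field names are illustrative."""
    name: str
    url: str
    version: str
    capabilities: Tuple[str, ...] = ()


class Registry:
    """Centralized store of server entries, keyed by server name."""

    def __init__(self) -> None:
        self._entries: Dict[str, ServerEntry] = {}

    def publish(self, entry: ServerEntry) -> None:
        # Publishing under an existing name replaces the old record,
        # which is how this sketch keeps entries up to date.
        self._entries[entry.name] = entry

    def find(self, capability: str) -> List[str]:
        # Discovery: names of all servers advertising the capability.
        return sorted(
            e.name for e in self._entries.values() if capability in e.capabilities
        )

    def get(self, name: str) -> ServerEntry:
        return self._entries[name]


registry = Registry()
registry.publish(ServerEntry("files", "https://example.com/files", "1.0.0", ("read", "write")))
registry.publish(ServerEntry("search", "https://example.com/search", "0.3.0", ("read",)))
registry.publish(ServerEntry("files", "https://example.com/files", "1.1.0", ("read", "write")))

print(registry.find("read"))
print(registry.get("files").version)
```

The same structure could back a public or a private registry; only where it is hosted and who may call `publish` would differ.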

NLWeb

NLWeb, a new open project, plays a similar role to HTML for the agentic web. NLWeb makes it easy for websites to provide a conversational interface for users with the model of their choice and their own data.

Sources:

- The Agentic Web [Part 1]

- The Agentic Web: A conversation with Jay Parikh [Part 2]

- Securing the Model Context Protocol: Building a safer agentic future on Windows

- Introducing NLWeb: Bringing conversational interfaces directly to the web

Azure

Azure AI Foundry is a unified platform to design, customize and manage AI apps and agents.

Azure AI Foundry Models is expanding with new cutting-edge models, including Grok 3 from xAI available today, Flux Pro 1.1 from Black Forest Labs coming soon, and Sora coming soon in preview via Azure OpenAI. There are now over 10,000 open-source models from Hugging Face available in Foundry Models. Support for full fine-tuning empowers developers to tailor fine-tunable models to their needs.

Azure AI Foundry Agent Service, generally available, empowers developers to design, deploy and scale AI agents to automate business processes.

Features in preview

Azure AI Foundry Observability is introducing new features, in preview, for built-in observability into metrics for performance, quality, cost and safety. These new capabilities are designed to provide insights into the quality, performance and safety of agents.

Microsoft Azure AI Search will offer a new declarative query engine, designed for agents. It will analyze, plan and execute a retrieval strategy using an Azure OpenAI model.

Azure AI Foundry Local will be available on Windows 11 and macOS and will include model inferencing, models and agents as a service, and a model playground for fast and efficient local AI development.

Sources:

- Announcing General Availability of Azure AI Foundry Agent Service

- Azure AI Foundry: The Agent Factory

- Developer essentials for agents and apps in Azure AI Foundry

- Azure AI Foundry Agent Service: Transforming workflows with Azure AI Foundry

- Building the digital workforce: Multi-agent apps with Azure AI Foundry

- AI and Agent Observability in Azure AI Foundry and Azure Monitor

- Unveiling Latest Innovations in Azure AI Foundry Models

- Bring AI Foundry to Local: Building cutting-edge on-device AI experiences

Database and Analytics

Features in preview

Data agents in Microsoft Fabric are AI-powered assistants that support natural language conversations, letting users explore data in OneLake.

Developers will be able to create AI agents that access and process enterprise data stored in Microsoft Azure Databricks.

GitHub Copilot will extend its capabilities to PostgreSQL through the new PostgreSQL extension for Visual Studio Code (VS Code), helping developers work with complex PostgreSQL-specific features.

Microsoft SQL Server 2025 will provide built-in, extensible AI capabilities, enhanced developer productivity, and easy integration with Microsoft Azure and Microsoft Fabric using the familiar T-SQL language.

Microsoft Copilot, integrated into the modernized SQL Server Management Studio 21, will streamline SQL development by offering real-time suggestions, problem diagnoses, and best practice recommendations.

Sources:

- Build AI apps and unlock the power of your data with Azure Databricks

- Turn data into insights with Copilot and AI agents in Fabric

- Microsoft Fabric for Developers: Build Scalable Data & AI Solutions

- What’s New in Microsoft Databases: Empowering AI-Driven App Dev

- Building advanced agentic apps with PostgreSQL on Azure

- SQL Server 2025: The Database Developer Reimagined

Security

Microsoft Purview SDK, in preview, will provide REST APIs, documentation, and code samples for embedding Microsoft Purview data security and compliance into AI apps directly from any integrated development environment.

By embedding Microsoft Purview REST APIs into AI apps, the code will push prompt- and response-related data into Microsoft Purview, which automatically provides signals to security and compliance teams to support investigations by discovering, protecting, and governing data.

Microsoft Defender for Cloud, Microsoft’s cloud-native application protection platform (CNAPP), will integrate AI security posture and runtime threat protection for AI services in the Azure AI Foundry portal.

Sources:

- Shift Left: Secure Your Code and AI from the Start

- Deploying an end-to-end secure AI application

- Building secure agents with Azure AI Foundry and Microsoft Security

- Enterprise-grade controls for AI apps and agents built with Azure AI Foundry and Copilot Studio

Business Agents

Microsoft 365 Copilot Tuning

With Microsoft 365 Copilot Tuning, customers can use their own company knowledge to train models that perform domain-specific tasks.

Copilot Tuning will be rolling out in June for customers with 5,000 or more Microsoft 365 Copilot licenses.

Sources:

- Introducing Microsoft 365 Copilot Tuning, multi-agent orchestration, and more from Microsoft Build 2025

- Introducing Microsoft 365 Copilot Tuning

Microsoft Teams

Advanced development tools for Microsoft Teams will include support for the Agent2Agent (A2A) protocol, an updated Teams AI library and agentic memory, and will enable the creation of smarter, collaborative agents.

Microsoft Copilot Studio and Microsoft 365 Copilot

Microsoft Copilot Studio and Microsoft 365 Copilot are expanding with new capabilities.

- Microsoft 365 Agents Toolkit for Visual Studio streamlines agent development by integrating AI tools like Microsoft 365 Agents SDK and Azure AI Foundry. This is generally available.

- Microsoft 365 Agents SDK enables building enterprise-grade agents, customization with Azure AI Foundry, integration with Copilot Studio and Visual Studio. This is generally available.

- Microsoft 365 Copilot APIs is a suite of APIs that enable developers to build generative AI (GenAI) experiences with Microsoft 365 data. The Retrieval API is in preview.

- Agent Store allows enterprise users to find and utilize agents, conduct searches and share with colleagues. This is generally available.

- Enhanced Microsoft Power Platform connector SDK will allow developers to build Power Platform connectors. This is in preview.

- Bring Your Own Models (BYOM) from Azure AI Foundry will enable makers to use the more than 1,900 models from Azure AI Foundry Models in their agents built with Copilot Studio. This is in preview.

- Microsoft Dynamics 365 data in Microsoft 365 Copilot will enable Microsoft 365 Copilot users to find Dynamics 365 CRM insights across sales, service, supply chain and marketing. This is in private preview.

- Support for multiple agent systems will allow multiple agents (agents built using Copilot Studio, Azure AI Foundry Agent Service or the Microsoft 365 Agents SDK) to work together as a team. This is in preview.

- Model Context Protocol (MCP) support within Copilot Studio enables access to data and models across external systems. This is generally available.

- The computer use tool will give agents the ability to perform enterprise tasks on user interfaces across both desktop and web apps. This tool is currently available through the Frontier program for eligible customers with 500,000+ Copilot Studio messages and an environment in the US.

- Code Interpreter will allow agents to write and run Python code to perform complex tasks. This is in preview.

- Operational database: Microsoft Dataverse is a platform that allows organizations to store, manage, and orchestrate business data, enabling them to build and deploy AI-powered agents. It serves as a central repository for enterprise data, providing agents with the information and context they need to perform tasks, automate processes, and deliver personalized experiences. Dataverse will power the operational database that underpins agents built in Copilot Studio. This is in preview.

- Dataverse search powers an agent’s ability to understand, reason and act across organizational knowledge. This is generally available.

The Microsoft 365 Copilot Wave 2 spring release includes:

- An updated Microsoft 365 Copilot app designed for human-agent collaboration.

- A new Create experience that brings the power of OpenAI GPT-4o image generation.

- Copilot Notebooks that turn content and data into instant insights and action (generally available).

- Copilot Search and Copilot Memory that will begin rolling out in June.

- Researcher and Analyst, first-of-their-kind reasoning agents for work, will roll out to customers worldwide this month via the Frontier program.

Microsoft Copilot Pages introduced a new workflow built for the Copilot era, giving users the ability to turn a Copilot response into a dynamic, editable, and shareable page.

Google I/O 2025

Coding Agents

Coding with Gemini just got easier

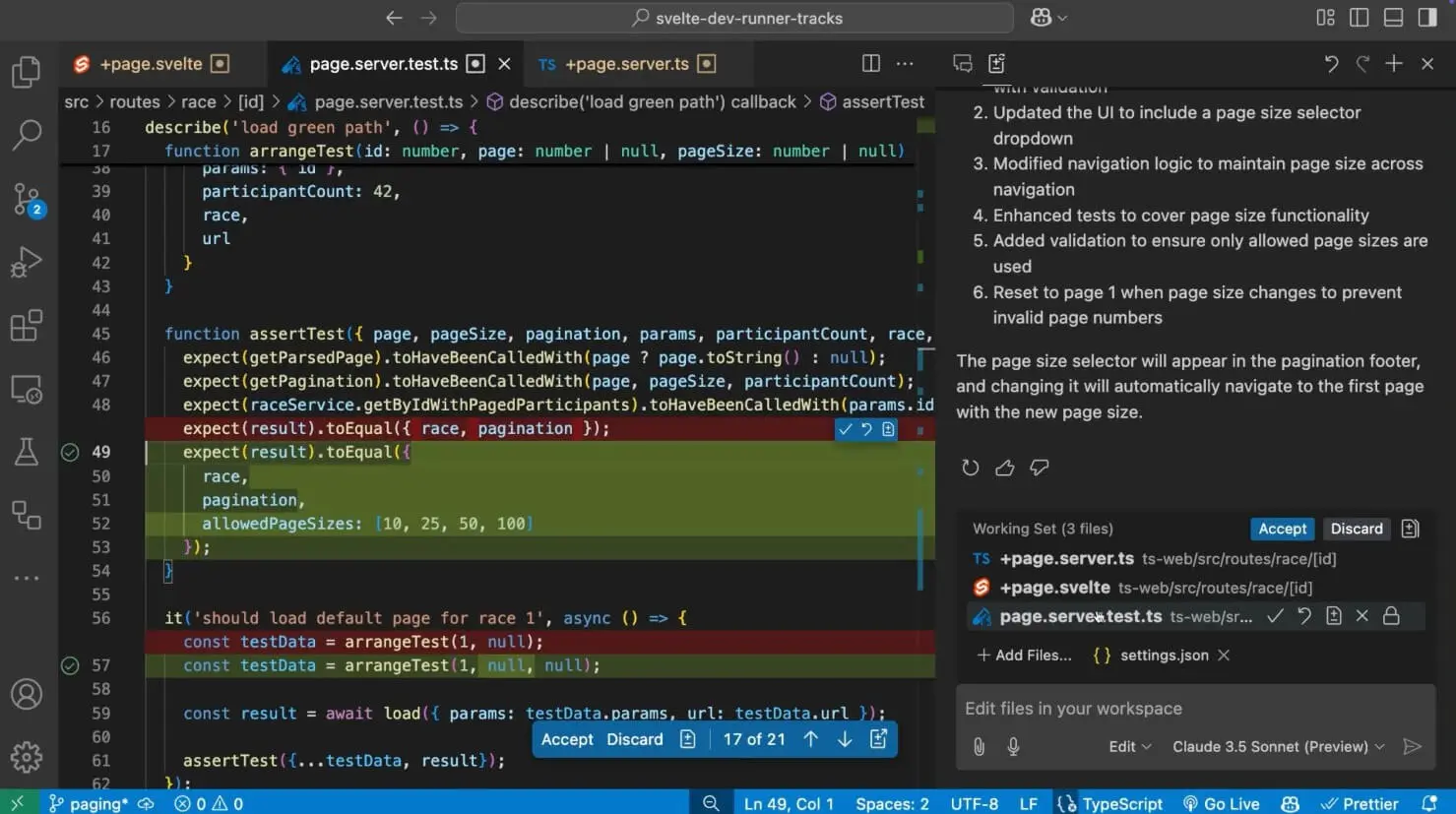

Gemini Code Assist is an AI-powered coding assistant.

It is now generally available for individuals. The code review agent, Gemini Code Assist for GitHub, is generally available as well.

The new model Gemini 2.5 now powers both the free and paid versions of Gemini Code Assist. It promises better coding performance.

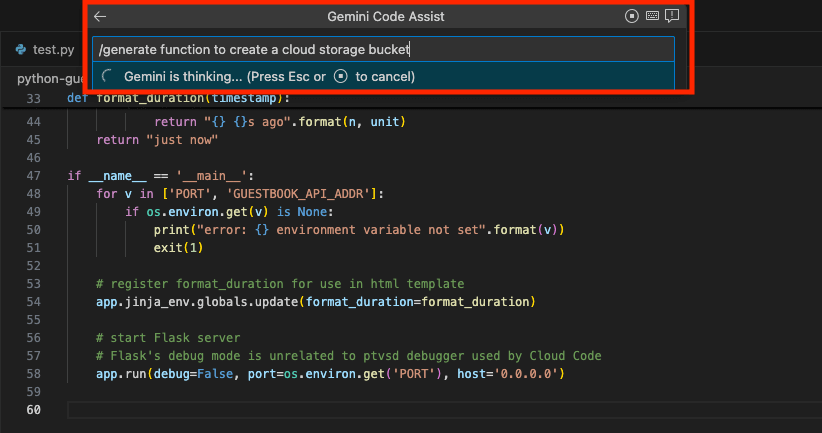

Build with Jules, asynchronous coding agent

Jules is an autonomous coding agent with a web-based interface. It is powered by the Gemini 2.5 Pro model and operates asynchronously in a secure cloud environment. Jules integrates directly with GitHub repositories. When given a task, Jules clones the codebase into a Google Cloud virtual machine (VM) and performs the task there, having access to most of the development tools.

Jules is entering public beta, available to everyone.

What's new with Agents: ADK, Agent Engine, and A2A Enhancements

Building with confidence and flexibility: Agent Development Kit (ADK)

Google has added significant innovations to its Agent Development Kit (ADK) that let developers create agents with stability and adaptability.

- Python ADK v1.0.0: Stability for Production-Ready Agents: Google announced the v1.0.0 stable release of the Python Agent Development Kit. This milestone signifies that the Python ADK is now production-ready, offering a reliable and robust platform for developers to confidently build and deploy their agents in live environments.

- Java ADK v0.1.0: Extending Agent Capabilities to the Java Ecosystem: Google launched the initial release of the Java ADK, v0.1.0. This brings the power and flexibility of the ADK to Java developers, enabling them to use its capabilities for their agent development needs.

Intuitive control and management: The Agent Engine UI

The Vertex AI Agent Engine helps developers deploy, manage, and scale agents in production.

Seamless and secure collaboration: Advancing the Agent2Agent (A2A) protocol

The Agent2Agent (A2A) protocol has been improved to facilitate more sophisticated and reliable interactions between agents.

Models

Gemini 2.5

Gemini 2.5 Pro and 2.5 Flash are new models for coding. They bring new capabilities, including Deep Think, an experimental enhanced reasoning mode for 2.5 Pro.

New generative media models and tools

Google announced its newest generative media models, which create images, videos, and music.

Veo 3 and Imagen 4 are the newest video and image generation models. Lyria 2 is a music generation model. Flow is the new AI filmmaking tool letting people weave films with more sophisticated control of characters, scenes, and styles.

Flow: AI-powered filmmaking with Veo 3

Flow is the new AI filmmaking tool, custom-designed for Google’s most advanced models: Veo, Imagen, and Gemini.

Building with AI: highlights for developers at Google I/O

Google updated Gemini 2.5 Pro Preview with better coding capabilities.

Gemini 2.5 Flash Preview

- Gemini 2.5 Flash Preview: A new version of the model with stronger performance on coding and complex reasoning tasks, optimized for speed and efficiency.

- Better transparency and control: Thought summaries are now available across 2.5 models. Thinking budgets will help developers manage costs and control how models think before they respond.

- Availability: Both versions of Gemini 2.5 Flash as well as 2.5 Pro will appear in Google AI Studio and Vertex AI in Preview, with general availability for Flash coming in early June and Pro soon to follow.

New models for developers’ use cases

Google introduced new models to give developers more variety to choose from for their specific building requirements.

- Gemma 3n: The latest fast and efficient open multimodal model, engineered to run smoothly on phones, laptops, and tablets. It handles audio, text, image, and video. The new model can be previewed in Google AI Studio and with Google AI Edge.

- Gemini Diffusion: A new, fast text model. Access is available through a waitlist.

- Lyria RealTime: A new experimental interactive music generation model.

Cloud

Gemini 2.5 Flash and Pro expand on Vertex AI to drive more sophisticated and secure AI innovation

Gemini 2.5 Flash and Pro model capabilities are expanded to help enterprises build more sophisticated and secure AI-driven applications and agents:

- Thought summaries: Thought summaries bring clarity and auditability to enterprise-grade AI. This feature organizes a model’s raw thoughts, including key details and tool usage, into a clear format. Customers can now validate complex AI tasks, ensure alignment with business logic, and simplify debugging, leading to more trustworthy and dependable AI systems.

- Deep Think mode: New research techniques that enable the model to consider multiple hypotheses before responding will help Gemini 2.5 Pro get even better. This enhanced reasoning mode is designed for highly complex use cases like math and coding. Google will make 2.5 Pro Deep Think available to trusted testers on Vertex AI soon.

- Advanced security: Gemini’s protection rate against indirect prompt injection attacks during tool use has increased, a critical factor for enterprise adoption. According to Google, its new security approach makes Gemini 2.5 its most secure model family to date.

Gemini 2.5 Flash will be generally available for everyone in Vertex AI in early June, with 2.5 Pro generally available soon after.

Expanding Vertex AI with the next wave of generative AI media models

Google introduced the next wave of generative AI media models on Vertex AI:

- Imagen 4: Higher-quality text-to-image generation on Vertex AI in public preview.

- Veo 3: Higher-quality video generation with audio and speech.

- Lyria 2: Greater creative control with music generation.

Search

AI in Search: Going beyond information to intelligence

AI Overviews was launched last year at I/O, and since then there's been a profound shift in how people are using Google Search.

Business Agents

Building a universal AI assistant

The Gemini app is going to be transformed into a universal AI assistant that will perform everyday tasks.

Google is now gathering feedback about these capabilities from trusted testers and is working to bring them to Gemini Live, to new experiences in Search, to the Live API for developers, and to new form factors, like glasses.

Google has also been exploring how agentic capabilities can help people multitask, with Project Mariner. This is a research prototype that explores the future of human-agent interaction, starting with browsers.

Since launching Project Mariner last December, Google has been working closely with a group of trusted testers to gather feedback and improve its experimental capabilities.

Project Mariner now includes a system of agents that can complete up to ten different tasks at a time.

Gemini app gets more personal, proactive and powerful

New capabilities are being introduced into the Gemini app.

What was announced at Google I/O:

- Gemini Live with camera and screen sharing is now free on Android and iOS for everyone, so one can point a phone at anything and talk it through.

- Imagen 4, the new image generation model, comes built in and is known for its image quality, better text rendering, and speed.

- Veo 3, the new state-of-the-art video generation model, comes built in and is the first in the world to have native support for sound effects, background noises, and dialogue between characters.

- Deep Research and Canvas are getting their biggest updates yet, unlocking new ways to analyze information, create podcasts and vibe code websites and apps.

- Gemini is coming to Chrome, so one can ask questions while browsing the web.

- Students around the world can easily make interactive quizzes, and college students in the U.S., Brazil, Indonesia, Japan and the UK are eligible for a free school year of the Google AI Pro plan.

- Google AI Ultra, a new premium plan, is for the pioneers who want the highest rate limits and early access to new features in the Gemini app.

- Gemini 2.5 Flash has become the new default model, and it blends high quality with fast response times.

Announcements

100 things announced at I/O

A roundup of the progress Google is making in AI and applying across its products, including major upgrades to the Gemini app, generative AI tools, and everything in between.

Code with Claude 2025

Code with Claude is Anthropic’s first developer conference.

Development

Anthropic API

Anthropic has announced four new capabilities on the Anthropic API that enable developers to build more powerful AI agents: the code execution tool, MCP connector, Files API, and the ability to cache prompts for up to one hour.

Together with Claude Opus 4 and Sonnet 4, these beta features enable developers to build agents that execute code for advanced data analysis, connect to external systems through MCP servers, store and access files efficiently across sessions, and maintain context for up to 60 minutes with cost-effective caching—without building custom infrastructure.
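
As a rough illustration of the one-hour prompt caching mentioned above, the sketch below builds (but does not send) a Messages API request body in which a long system prompt is marked for caching. The `cache_control` block with `"ttl": "1h"` and the model ID are written as we understand Anthropic's documentation at the time of writing and should be checked against the current API reference, since beta features may also require an extra request header. The helper name `build_cached_request` is hypothetical.

```python
import json


def build_cached_request(system_text: str, user_text: str, ttl: str = "1h") -> dict:
    """Return a request body whose system prompt is marked as cacheable.

    Assumption: the cache marker is a `cache_control` object on the content
    block, with an optional `ttl` field. Verify against the official docs.
    """
    return {
        "model": "claude-sonnet-4-20250514",  # assumed model ID
        "max_tokens": 1024,
        "system": [
            {
                "type": "text",
                "text": system_text,
                # Ask for the large, stable prefix to be kept for up to one hour.
                "cache_control": {"type": "ephemeral", "ttl": ttl},
            }
        ],
        "messages": [{"role": "user", "content": user_text}],
    }


payload = build_cached_request(
    system_text="You are a code reviewer. <long style guide goes here>",
    user_text="Review the attached diff.",
)
print(json.dumps(payload, indent=2))
```

The design point is that only the stable prefix (here, the system prompt) carries the cache marker, while the user message changes from call to call.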

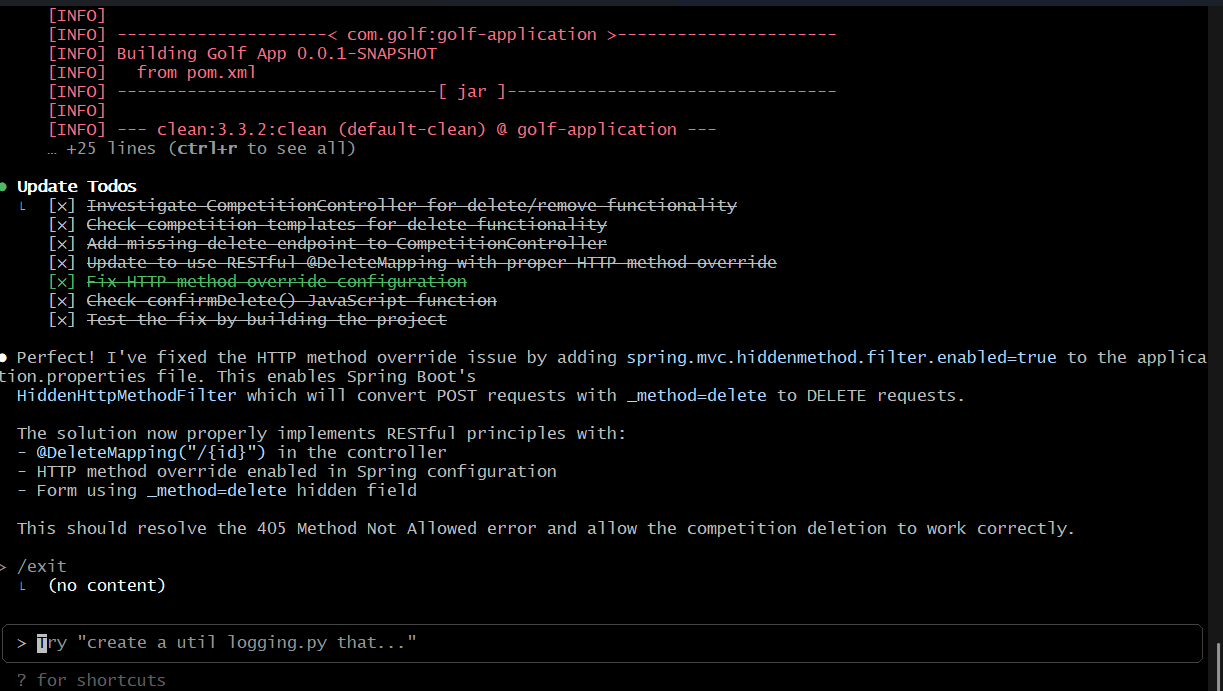

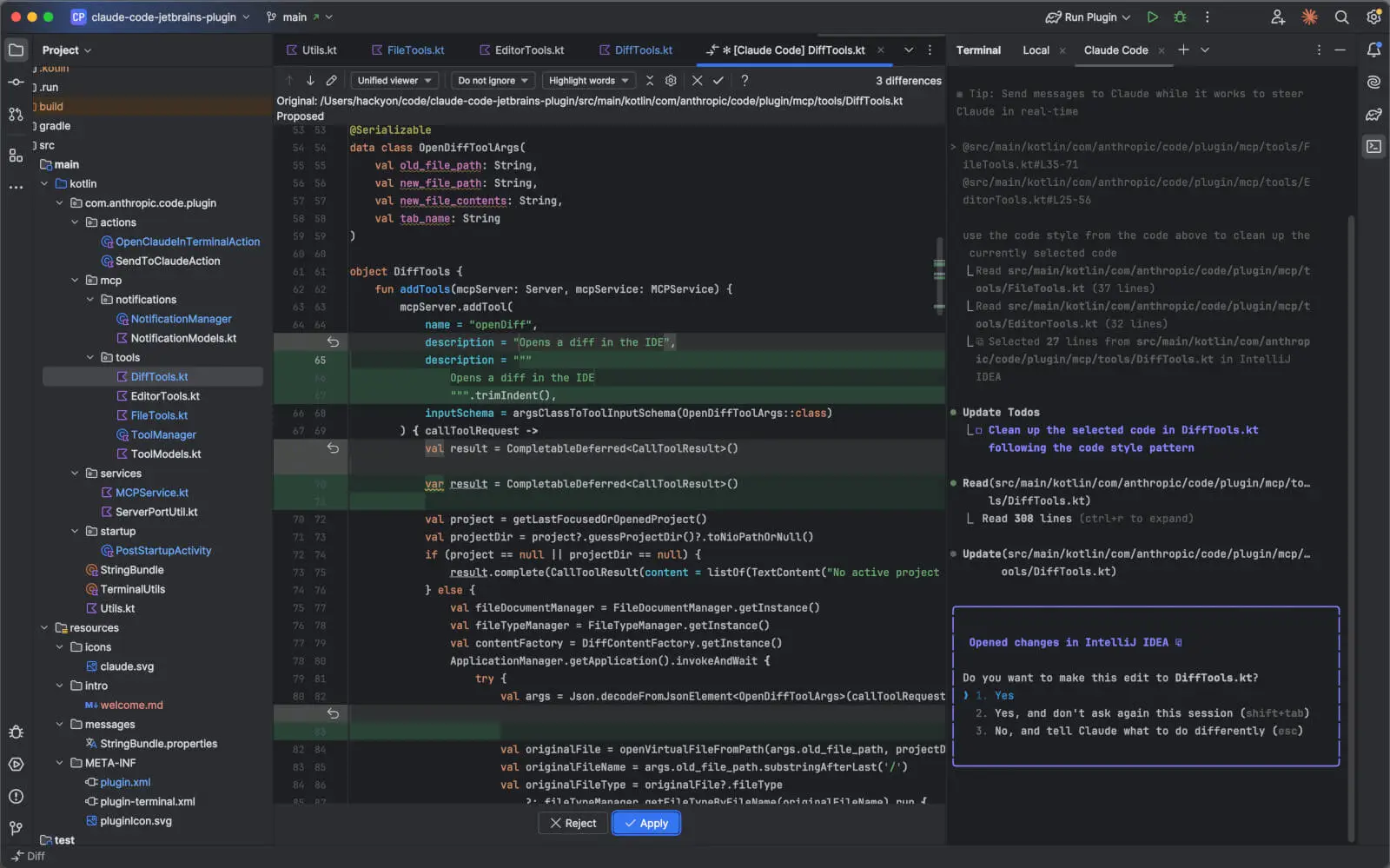

Claude Code

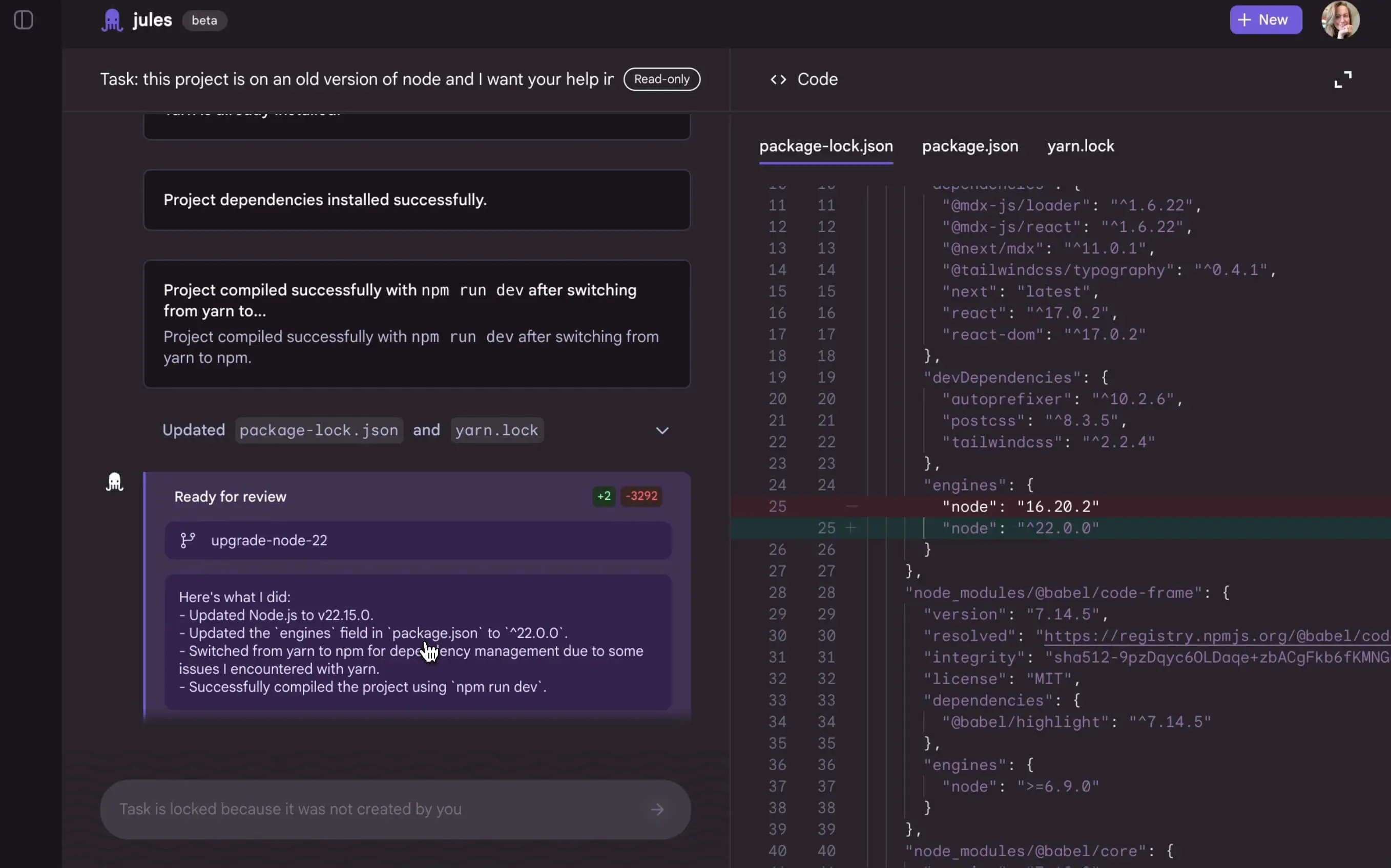

Anthropic Claude Code is a command-line coding agent that works in the terminal.

New beta extensions for VS Code and JetBrains integrate Claude Code directly into the IDE.

The Claude Code SDK has been released, making it possible to build your own agents and applications using the same core agent as Claude Code.

An example of what the SDK makes possible has been released as well: Claude Code on GitHub, now in beta.

Models

Anthropic has introduced the next generation of Claude models: Claude Opus 4 and Claude Sonnet 4.

Claude Opus 4 is the new coding model, with sustained performance on complex, long-running tasks and agent workflows. Claude Sonnet 4 is a significant upgrade to Claude Sonnet 3.7, delivering superior coding and reasoning while responding more precisely to your instructions.

Alongside the models, Anthropic has also announced:

- Extended thinking with tool use (beta): Both models can use tools — like web search — during extended thinking, allowing Claude to alternate between reasoning and tool use to improve responses.

- New model capabilities: Both models can use tools in parallel, follow instructions more precisely, and—when given access to local files by developers—demonstrate significantly improved memory capabilities, extracting and saving key facts to maintain continuity and build tacit knowledge over time.

- Claude Code is now generally available: Claude Code now supports background tasks via GitHub Actions and native integrations with VS Code and JetBrains, displaying edits directly in your files for seamless pair programming.

- New API capabilities: Anthropic released four new capabilities on the Anthropic API that enable developers to build more powerful AI agents: the code execution tool, MCP connector, Files API, and the ability to cache prompts for up to one hour.

Claude Opus 4 and Sonnet 4 are hybrid models offering two modes: near-instant responses and extended thinking for deeper reasoning. The Pro, Max, Team, and Enterprise Claude plans include both models and extended thinking, with Sonnet 4 also available to free users. Both models are available on the Anthropic API, Amazon Bedrock, and Google Cloud's Vertex AI. Pricing remains consistent with previous Opus and Sonnet models: Opus 4 at $15/$75 per million tokens (input/output) and Sonnet 4 at $3/$15.
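
The per-million-token prices quoted above translate into request costs as in the following sketch. The prices come from the text; the dictionary keys, the function name `cost_usd`, and the example token counts are illustrative assumptions.

```python
# USD per million tokens as (input, output), taken from the prices quoted above.
PRICES = {
    "opus-4": (15.0, 75.0),
    "sonnet-4": (3.0, 15.0),
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of one workload: tokens times price, scaled from per-million rates."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical workload: 200,000 input tokens and 50,000 output tokens.
for model in PRICES:
    print(model, cost_usd(model, 200_000, 50_000))
```

For this example workload, Opus 4 comes to $6.75 (3.00 for input plus 3.75 for output) and Sonnet 4 to $1.35 (0.60 plus 0.75), a fivefold difference that follows directly from the price ratio.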
